And then there is Cerebras, where scale‑up is essentially “inside one wafer” (one CS system), and scale‑out is multiple wafers connected via SwarmX + MemoryX over Ethernet. For scale‑out, Cerebras connects multiple CS systems using the SwarmX interconnect plus MemoryX servers in a broadcast‑reduce topology. SwarmX broadcasts weights to many wafers and reduces gradients back into MemoryX, so that many CS‑3s can train one large model in data‑parallel fashion. CS‑3 supports scale‑out clusters of up to 2,048 CS‑3 systems, with low‑latency RDMA‑over‑Ethernet links carrying only activations/gradients between wafers while keeping the bulk of traffic on‑wafer.
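The broadcast-reduce pattern described above can be illustrated with a toy data-parallel training step. This is a sketch under stated assumptions, not Cerebras software: `MemoryXStore`, `broadcast` and `reduce_sum` are hypothetical stand-ins for the roles the post assigns to MemoryX and SwarmX, and a one-variable linear regression stands in for a real model.

```python
# Toy broadcast-reduce data-parallel step (illustrative, not Cerebras software).
# MemoryXStore, broadcast() and reduce_sum() are HYPOTHETICAL stand-ins for the
# roles the post assigns to MemoryX (weight store) and SwarmX (fabric).
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a 1-D linear model


class MemoryXStore:
    """Holds the single master copy of the weights and applies updates."""

    def __init__(self, weights: List[float], lr: float) -> None:
        self.weights = list(weights)
        self.lr = lr

    def apply_update(self, grad: List[float]) -> None:
        # Plain SGD step on the master copy.
        self.weights = [w - self.lr * g for w, g in zip(self.weights, grad)]


def broadcast(weights: List[float], n_wafers: int) -> List[List[float]]:
    # Every wafer receives an identical copy of the current weights.
    return [list(weights) for _ in range(n_wafers)]


def reduce_sum(grads: List[List[float]]) -> List[float]:
    # Element-wise sum of the per-wafer gradients.
    return [sum(col) for col in zip(*grads)]


def local_gradient(w: List[float], batch: List[Sample]) -> List[float]:
    # Mean-squared-error gradient for y_hat = w[0] + w[1] * x on one wafer's shard.
    g0 = g1 = 0.0
    for x, y in batch:
        err = (w[0] + w[1] * x) - y
        g0 += 2.0 * err
        g1 += 2.0 * err * x
    return [g0 / len(batch), g1 / len(batch)]


def train_step(store: MemoryXStore, shards: List[List[Sample]]) -> None:
    replicas = broadcast(store.weights, len(shards))  # weights go out
    grads = [local_gradient(w, s) for w, s in zip(replicas, shards)]
    total = reduce_sum(grads)  # gradients come back
    # Average so the step size does not grow with the number of wafers.
    store.apply_update([g / len(shards) for g in total])
```

With equal-sized shards, a step spread over four simulated wafers produces the same update as one full-batch step on a single device, which is why the master weights can live in one place and only gradients need to be reduced.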

Cisco launched its Silicon One G300 AI networking chip in a move that aims to compete with Nvidia and Broadcom.

- Thread starter Daniel Nenni

Curious how Cerebras handles large memory access. No matter how much SRAM they have on chip, it's nowhere near what HBM provides.

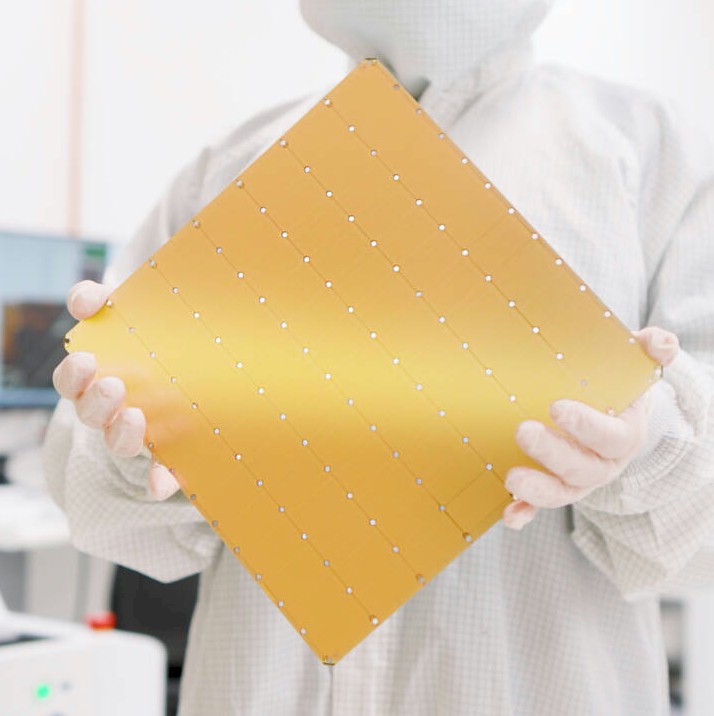

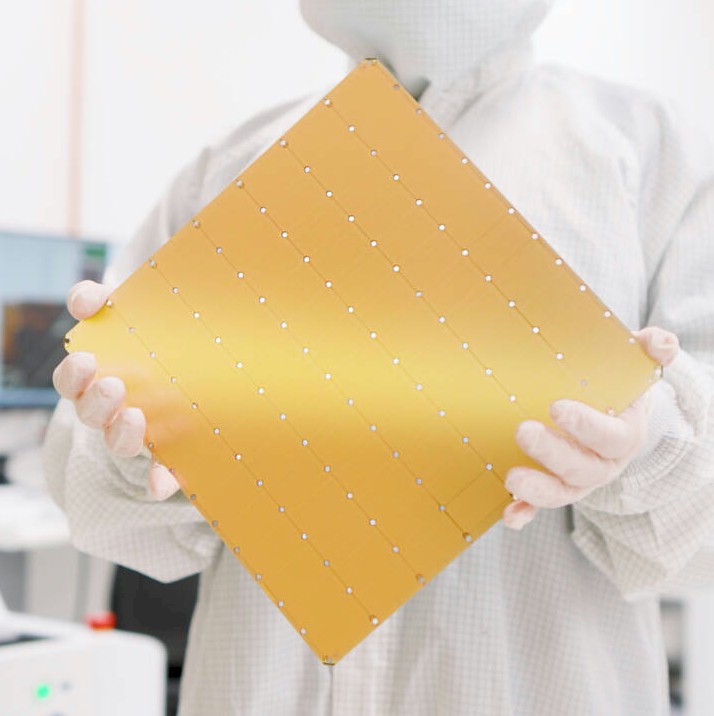

Cerebras uses dedicated servers, called MemoryX servers, which are connected to the WSE-3 nodes over the SwarmX fabric. The MemoryX configuration can include up to 1.2PB of shared memory storage, consisting of DDR5 and Flash tiers. There is 44GB of SRAM on each WSE-3, and that SRAM has far lower access latency, as well as lower fabric latency, than any HBM.
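To put the 44GB figure in perspective, here is a back-of-the-envelope count of how many weights fit in on-wafer SRAM and in a maximal 1.2PB MemoryX configuration. The bytes-per-parameter values are the standard widths of those number formats, not Cerebras-specific figures, and only weights are counted (no activations, KV cache or optimizer state).

```python
# Back-of-the-envelope: how many weights fit in a given memory budget.
# Assumes decimal units (1 GB = 1e9 bytes) and counts weights only: no
# activations, KV cache or optimizer state.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}

WSE3_SRAM_BYTES = 44e9      # 44 GB of on-wafer SRAM, as stated in the post
MEMORYX_MAX_BYTES = 1.2e15  # 1.2 PB MemoryX upper bound, as stated in the post


def max_params(capacity_bytes: float, precision: str) -> float:
    """Largest parameter count whose weights alone fit in capacity_bytes."""
    return capacity_bytes / BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for prec in BYTES_PER_PARAM:
        on_wafer_b = max_params(WSE3_SRAM_BYTES, prec) / 1e9
        memoryx_t = max_params(MEMORYX_MAX_BYTES, prec) / 1e12
        print(f"{prec:>4}: ~{on_wafer_b:g}B params on-wafer, ~{memoryx_t:g}T in MemoryX")
```

At BF16 that works out to about 22 billion parameters per wafer, which is the crux of the SRAM-versus-HBM question in this exchange.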

Do they have a system that actually uses the PB-scale memory for training? SRAM alone, while it seems big, is far from enough. Perhaps their position is like Groq's, focused on inference.

Yes.

I'm not a big fan of TP Morgan for technical understanding, but Cerebras people provided the information and explanations in the article. However, the article is out of date, and Cerebras also does inference now; they claim it is the world's fastest.

Cerebras Goes Hyperscale With Third Gen Waferscale Supercomputers

It is a pity that we can’t make silicon wafers any larger than 300 millimeters in diameter. If there were 450 millimeter diameter silicon wafers, as we

www.nextplatform.com

Inference - Cerebras

Cerebras inference - the fastest inference API for generative AI

www.cerebras.ai

HPC requirements are different from training. Inference makes sense. I wish we had more trustworthy independent benchmarks as this part of the industry matures.

Coherent-lite is likely to cover both scale-out and scale-across. As for CPO, indeed the overall DC power saving from CPO is very limited. Perhaps the world will continue to partition into the NV and Google approaches, just like GPU and TPU.

If/when it happens -- not by any means certain yet, it's not even been defined! -- coherent-lite will be mainly targeted at scale-across; scale-out is more likely to use standard 1600G DD, and DCI will use ZR pluggables.

Cisco Takes On Broadcom, Nvidia For Fat AI Datacenter Interconnects

Wide area networks and datacenter interconnects, or DCIs, as we have known them for the past decade or so are nowhere beefy enough or fast enough to take

www.nextplatform.com

CPO rollout is likely to stay limited until we get to the point where getting the high-speed data to pluggables becomes impossible -- even with flyover cables, which 448G SERDES will use -- because of loss budgets. At which point there's no choice except moving to CPO, but that's certainly still several years away, which means not this generation or even the next one.

You misread the NextPlatform article, though I'm not surprised considering Morgan's flamboyant writing style, calling the WSE-3 systems "supercomputers". That's nonsense in the traditional sense. Cerebras can't do traditional HPC; these are dedicated AI systems with processor cores completely focused on AI instructions.

Cerebras does training of very large models, but I don't think any of the big cloud companies have taken the bait yet. Cerebras seems to be focusing on sovereign AI, like G42, and the big pharma companies.

With the CS-3 machines, there are now options for 24 TB and 36 TB for enterprises and 120 TB and 1,200 TB for hyperscalers, which provides 480 billion and 720 billion parameters of storage as the top end of the enterprise scale and 2.4 trillion or 24 trillion for the hyperscalers. Importantly, all of this MemoryX memory can be scaled independently from the compute – something you cannot do with any GPUs or even Nvidia’s Grace-Hopper superchip hybrid, which has static memory configurations as well.
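The capacity and parameter figures in the article excerpt are internally consistent: every option works out to the same memory budget per parameter. A quick check, assuming decimal terabytes; the excerpt does not say what those 50 bytes per parameter cover, so reading them as weights plus optimizer state would be an assumption.

```python
# Implied MemoryX bytes per parameter, from the figures quoted in the article.
# Values are (capacity in TB, parameters in billions); decimal units assumed.
QUOTED = {
    "enterprise 24 TB": (24, 480),
    "enterprise 36 TB": (36, 720),
    "hyperscale 120 TB": (120, 2_400),
    "hyperscale 1,200 TB": (1_200, 24_000),
}


def bytes_per_param(capacity_tb: float, params_billions: float) -> float:
    """Memory budget per parameter implied by a (capacity, params) pair."""
    return (capacity_tb * 1e12) / (params_billions * 1e9)


if __name__ == "__main__":
    for name, (tb, params_b) in QUOTED.items():
        print(f"{name}: {bytes_per_param(tb, params_b):g} bytes/param")
```

All four options print 50 bytes/param.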

I think they have gone pretty big into inference as well, especially on the fast, low-latency end. They have announced deals to provide inference to OpenAI, Perplexity and Mistral, but I'm pretty sure that they had to do the heavy lifting by building and operating their own data centers (at least 6 of them globally) and selling services/tokens to the model companies. It seems like the only way to really build AI chips anymore is to build and operate your own data centers (unless you're NVIDIA). That's the only way you can get enough real-world operating experience to do data-center-level AI inference system co-optimization.

Simple case below for 2024. 2024 was all about fitting large dense models into memory. 2025 has gotten far more complicated, with MoE and bunches of "smarter" but smaller expert model-ettes activating, then coordinating results back to all experts. Add in separating prefill and decode onto different groups of processors, and building and managing a KV cache. Not sure how they do the 2025-2026 edition.

If the model fits on one wafer

• A CS‑3 has 44 GB of on‑chip SRAM across the wafer; for many production LLMs, all weights can be placed on‑wafer for inference.

• In that regime you don’t need weight streaming at runtime: parameters sit in SRAM next to the cores, so inference runs purely on‑wafer without repeatedly fetching weights from external memory.

• This is where Cerebras reports 10× faster LLM inference vs GPU clusters, driven by much higher effective memory bandwidth and no HBM/PCIe hops.

If the model is larger than one wafer

• For very large models whose weights exceed 44 GB per wafer, Cerebras can reuse the same weight‑streaming mechanism as in training:

  • Weights reside in external MemoryX.

  • For each layer (or group of layers), weights are streamed onto the wafer; activations stay on‑wafer; results move forward layer‑by‑layer.

  • Latency is hidden the same way as in training: by overlapping weight transfers with compute and by exploiting coarse‑ and fine‑grained parallelism on the mesh.
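Overlapping weight transfers with compute hides latency only while each layer's compute takes at least as long as streaming in the next layer's weights. A toy timing model makes that condition explicit; it assumes ideal double buffering over a single link, and the per-layer times are hypothetical inputs rather than Cerebras measurements.

```python
# Toy timing model for layer-by-layer weight streaming with prefetch.
# Assumes ideal double buffering: while layer i computes from one buffer,
# layer i+1's weights stream into the other. Per-layer times are HYPOTHETICAL
# inputs, not measured Cerebras numbers.
from typing import Sequence


def streamed_time(compute: Sequence[float], transfer: Sequence[float]) -> float:
    """Total time when each layer's transfer overlaps the previous layer's compute."""
    if len(compute) != len(transfer) or not compute:
        raise ValueError("need one compute and one transfer time per layer")
    total = transfer[0]  # first layer's weights must arrive before compute starts
    for i in range(len(compute)):
        next_transfer = transfer[i + 1] if i + 1 < len(transfer) else 0.0
        # A step ends when both the current compute and the next prefetch finish.
        total += max(compute[i], next_transfer)
    return total


def serial_time(compute: Sequence[float], transfer: Sequence[float]) -> float:
    """Total time with no overlap: fetch a layer's weights, then compute it."""
    return sum(compute) + sum(transfer)
```

With eight layers at 4 units of compute and 3 units of transfer each, streaming takes 35 units against 56 serial, so transfers are almost fully hidden. Flip the ratio to 2 units of compute and 5 of transfer and streaming takes 42 units, dominated by transfer time; that boundary is what the later disagreement in this thread about latency hiding turns on.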

Scaling out inference

• Multiple CS systems can serve inference together using SwarmX plus MemoryX, similar to training:

  • MemoryX holds one or more model copies; SwarmX broadcasts weights (if streaming is used) and aggregates any needed results.

  • For many inference workloads, the preferred pattern is replicated models across CS‑3s, each handling its own request stream, so most traffic stays local to each wafer and SwarmX is used more for management than for per‑token communication.
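The replicated-model pattern in the last bullet can be sketched as a dispatcher that gives each request to exactly one replica, so per-token work never has to cross wafers. Round-robin routing and integer replica indices are simplifying assumptions; a production scheduler would presumably route by load.

```python
# Sketch: replicated-model serving where each replica (e.g. one CS-3) owns its
# own request stream. Round-robin routing is a simplifying ASSUMPTION.
from itertools import cycle
from typing import Dict, List


def dispatch_round_robin(requests: List[str], n_replicas: int) -> Dict[int, List[str]]:
    """Assign each request to exactly one replica in round-robin order."""
    if n_replicas < 1:
        raise ValueError("need at least one replica")
    queues: Dict[int, List[str]] = {r: [] for r in range(n_replicas)}
    # zip stops when the requests run out, so cycle() never loops forever.
    for replica, req in zip(cycle(range(n_replicas)), requests):
        queues[replica].append(req)
    return queues
```

Seven requests over three replicas land as three, two and two, and each request appears in exactly one queue.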

Agreed. That's why I posted their inferencing link.

Cerebras has announced a cloud computing service, which is mostly about inference, but being a private company they don't break down revenue sources. I suspect a significant driver for their cloud services is that Cerebras systems probably have some very different operations and support requirements from more common x86- and Arm-based servers and industry-standard networks, especially if something goes wrong.

Just saw this today: a specific use of Cerebras where the fast token rate and minimal latency can eventually sell at a premium. I imagine both the model and the data centers were tuned for the lowest TCO (fitting the model into the hardware) that still gives those token rates/latency.

Introducing GPT‑5.3‑Codex‑Spark

An ultra-fast model for real-time coding in Codex.

There's no doubt that for specific (inference?) tasks which map well onto the Cerebras architecture (*enormous* local memory bandwidth and low-latency comms between processing engines on the WSE) there's nothing to touch it for performance, and probably not even for cost per token.

The $64M question is -- what fraction of the total AI market is this? 10%? 1%? 0.1%? Anyone know?

Cerebras silicon and software, IMO, can be used to solve virtually any AI training or inference problem with a large enough configuration. (It is not a general-purpose system.) The question is, how much of the market will bet on a very proprietary full-stack silicon-to-software solution from a privately held company with only modest revenue and a huge (by comparison) valuation? Will any hyperscaler companies make a billion-dollar investment in Cerebras systems without buying the company? (I doubt it.) US national labs will experiment with them (Sandia National Labs already has), and big pharma companies have, but IMO this is more about user companies' willingness to invest than about what fraction of the market has workloads suited to Cerebras's architecture and implementation.

A question I have is: will the Cerebras wafer-scale strategy be as compelling with a High-NA EUV lithography process as it is with the TSMC 5nm process?

That wasn't what I meant -- no doubt the Cerebras solution *can* solve any AI problem, but once the problem doesn't fit into the on-wafer memory/NPU array any more, the latency will suddenly go through the roof and the performance will take a dive, and when this happens I doubt it'll be competitive with the massive-volume commercial non-waferscale solutions (Nvidia, Google, whatever...). But if the problem *does* fit into the on-wafer memory/NPU array, it'll probably wipe the floor with the more conventional competition.

So what I was asking was -- does anyone know what fraction of the AI market falls below this critical "fits-on-the-wafer" size threshold? Because that pretty much says how much of the AI market Cerebras can capture... ;-)

P.S. However successful Cerebras are with 5nm, there's no reason to think this will change massively with high-NA -- their costs go up (as does the "does-it-fit?" crossover point), but so do everyone else's. Unless the size of the problems goes up so much that almost none of them fit on a wafer any more, which is quite possible... ;-)

This isn't correct. The Cerebras architecture uses a cache-like strategy which hides latency, similar to caching in CPUs.

That might be what they claim, but I don't really believe it -- what they mean is that they *try* to hide it, just like more conventional AI architectures *try* to hide NPU-NPU or rack-to-rack latency, but this only works up to a point and not in all cases.

If you want to compare it to caching in CPUs, then you always see big latency/speed steps when the problem goes from fitting in L1 to L2 and L3. With conventional NPUs the fastest memory is L1 on-chip (very small), then in-package HBM (L2) is much bigger but also much slower, then you have the (much longer) delay between tightly-coupled NPUs on the same PCB, then bigger delays to PCBs in the same rack, then bigger still rack-to-rack or row-to-row.

Cerebras has a lot fewer levels; there's a huge chunk of on-chip SRAM on the wafer which is almost as fast as conventional L1 but far bigger, this also tries to do the job of HBM but is much smaller (but also much faster) -- also the NPU-NPU delays on the wafer are a lot smaller than on PCB or within a rack. So anything that fits on the wafer (especially RAM) is likely to be a lot faster than a conventional architecture -- but as soon as you go past this the speed/latency falls off a cliff, because HBM has much more RAM capacity. That means jobs that are too big for the Cerebras RAM/NPU but small enough to fit in tightly-coupled HBM will very likely be faster on conventional architectures. If it won't fit in local HBM either then they're probably both similar.

Yes, there are things you can do with algorithms and model updates to try to hide latency; these work in some cases but not all.

It shouldn't be at all surprising that two very different architectures have very different performance depending on the problem they're trying to solve, that's the nature of the beast. Cerebras certainly have a niche where they perform exceptionally well for both speed and cost-per-token, but there are also other applications where they don't -- and here they'll lose out to the massive economies of scale that companies like Nvidia and Google have, so even if performance is competitive -- which I doubt is the case, but let's not assume that -- cost-per-token certainly won't be.
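The tiered argument above can be made concrete with a toy model that reports which memory level a working set fits in. Only the 44 GB figure comes from the thread; the GPU-like capacities are hypothetical placeholders, and the model deliberately ignores the latency-hiding techniques the other side of the thread points to.

```python
# Toy model of the cache analogy: which memory level holds a working set?
# The 44 GB figure comes from the thread; every other capacity below is a
# HYPOTHETICAL placeholder chosen only to show the shape of the argument.
from typing import List, Tuple

Level = Tuple[str, float]  # (name, capacity in bytes), fastest level first

WAFER_LIKE: List[Level] = [
    ("on-wafer SRAM", 44e9),
    ("off-wafer memory", float("inf")),
]
GPU_LIKE: List[Level] = [
    ("on-chip SRAM", 50e6),     # hypothetical
    ("in-package HBM", 192e9),  # hypothetical
    ("other NPUs / racks", float("inf")),
]


def level_for(working_set_bytes: float, levels: List[Level]) -> str:
    """Name of the fastest level that can hold the whole working set."""
    for name, capacity in levels:
        if working_set_bytes <= capacity:
            return name
    raise ValueError("levels must end with an unbounded level")
```

A 20 GB working set lands in on-wafer SRAM on the wafer-like hierarchy but in HBM on the GPU-like one; a 100 GB working set spills off-wafer on the first but still fits in HBM on the second. That window between the two capacities is where the post argues conventional hardware wins.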

Read how it works for yourself. I have long experience with multi-node scaling architectures and strategies, and what they say makes sense for AI training.

The Complete Guide to Scale-Out on Cerebras Wafer-Scale Clusters - Cerebras

Cerebras’ appliance mode offers users easy linear scaling on Cerebras Wafer-Scale Clusters of up to 192 CS-2 systems.

www.cerebras.ai

I've read how it works for myself, thank you very much, both there and elsewhere... ;-)

That is of course a carefully tailored Cerebras POV intended to help sell their systems, and is undoubtedly correct as far as it goes. But then you wouldn't expect them to point out the cases where they lose out, would you?

Other more independent analyses have pointed out exactly the kind of issues I described -- which should come as no surprise to anyone. Given two systems with completely different architectures there will always be cases where one will beat the other -- sometimes massively -- and vice versa, usually when the problem crosses boundaries in one of the architectures.

Algorithms can try and hide this -- sometimes more successfully than others -- but then a different application may expose the differences again.

Cerebras's achievement in finally getting wafer-scale processing to work after so many failures in the past is to be applauded; it's a big accomplishment, and it brings some big advantages with it for the right problems.

But it's not a cure-all for all problems even in a constrained area like AI, there are also cases where their advantages disappear or are even reversed, especially when overall cost is concerned -- their WSI is very expensive (though hugely capable) with specialist hardware and is made in small volumes, in contrast with the much more standard massive-volume approach of their competitors.

They deserve to win business in areas where their solution excels -- my question was, how much of the enormous AI market is this Cerebras-friendly niche?

Can you point to one of these analyses?

I think you've already decided the answer you will believe, so it looks like asking this question here is not going to be productive. Do you believe GPUs, even with tensor cores and specialized interconnects like NVLink, are an optimal answer for AI training?
