And then there is Cerebras, where scale‑up is essentially “inside one wafer” (one CS system), and scale‑out is multiple wafers connected via SwarmX + MemoryX over Ethernet. For scale‑out, Cerebras connects multiple CS systems using the SwarmX interconnect plus MemoryX servers in a broadcast‑reduce topology. SwarmX broadcasts weights to many wafers and reduces gradients back into MemoryX, so that many CS‑3s train one large model in data‑parallel fashion. CS‑3 supports scale‑out clusters of up to 2,048 systems, with low‑latency RDMA‑over‑Ethernet links carrying only activations/gradients between wafers while the bulk of traffic stays on‑wafer.
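To make the broadcast‑reduce pattern concrete, here is a toy sketch in plain Python. It is not Cerebras code and uses no Cerebras API: the function names, the one‑parameter model, and the four "wafers" are all assumptions for illustration. It only shows the data flow described above: one weight store, the same weights broadcast to every worker, and gradients averaged on the way back.

```python
# Toy simulation of broadcast-reduce data parallelism (not Cerebras code).
# All names are hypothetical; the "weight store" stands in for a MemoryX-like role.

def local_gradient(weights, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    w = weights[0]
    return [sum(2 * (w * x - y) * x for x, y in shard) / len(shard)]

def train_step(weights, shards, lr=0.005):
    # Broadcast: every worker receives an identical copy of the weights.
    replicas = [list(weights) for _ in shards]
    # Each worker computes a gradient on its own shard of the batch.
    grads = [local_gradient(r, s) for r, s in zip(replicas, shards)]
    # Reduce: gradients are averaged on the way back to the weight store.
    avg = [sum(g[i] for g in grads) / len(grads) for i in range(len(weights))]
    # The weight store applies one update on behalf of all workers.
    return [w - lr * g for w, g in zip(weights, avg)]

# Four simulated workers, each holding a shard of data drawn from y = 3x.
shards = [[(x, 3.0 * x) for x in range(k, k + 4)] for k in (1, 5, 9, 13)]
weights = [0.0]
for _ in range(50):
    weights = train_step(weights, shards)

print(weights[0])  # converges toward 3.0
```

The point of the sketch is that workers never talk to each other directly; they only see the broadcast weights and send gradients back, which is why adding workers does not change the per‑worker logic.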


Cisco launched its Silicon One G300 AI networking chip in a move that aims to compete with Nvidia and Broadcom.

- Thread starter Daniel Nenni


Curious how Cerebras handles large memory access. No matter how much SRAM they have on chip, it's nowhere near what HBM provides.

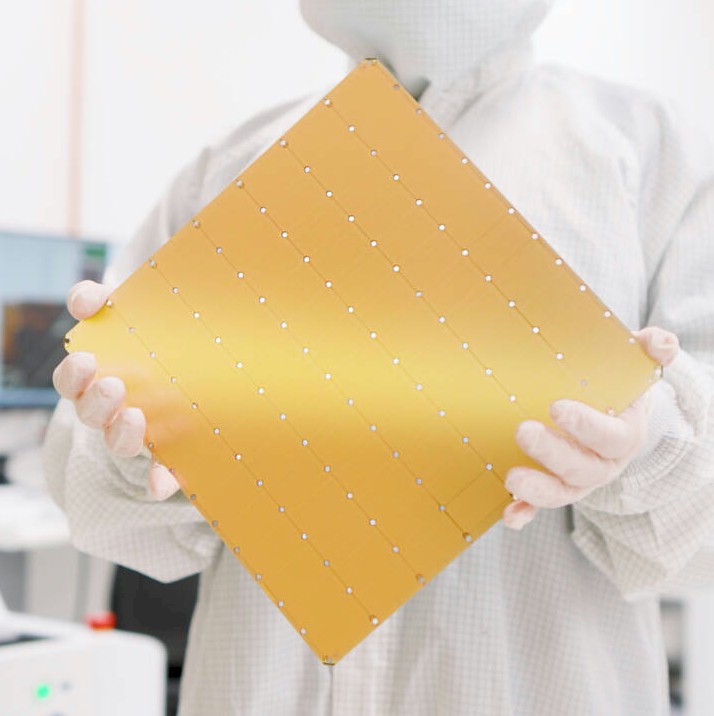

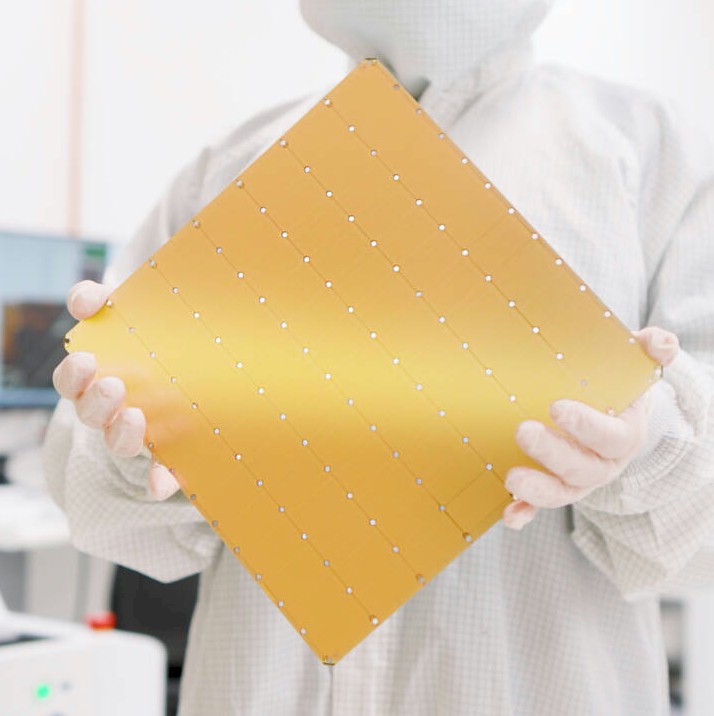

Cerebras uses dedicated servers, called MemoryX servers, which are connected to the WSE-3 nodes over the SwarmX fabric. A MemoryX configuration can include up to 1.2PB of shared memory storage, consisting of DDR5 and Flash tiers. There is 44GB of SRAM on each WSE-3, and both SRAM access latency and fabric latency are far lower than with any HBM.
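For a rough sense of scale, here is some back‑of‑envelope arithmetic on those two capacities. The 44GB and 1.2PB figures come from the post above; everything else is an assumption: decimal units, 2 bytes per parameter for fp16 weights, and 16 bytes per parameter for mixed‑precision Adam training state (fp16 weights and gradients plus fp32 master weights and two moments). Activations and tier overheads are ignored.

```python
# Back-of-envelope capacity arithmetic; per-parameter byte counts are assumptions.
GB = 10**9
TB = 10**12

SRAM_PER_WSE3 = 44 * GB     # on-wafer SRAM per WSE-3, from the post above
MEMORYX_MAX = 1_200 * TB    # 1.2PB maximum MemoryX capacity, from the post above

BYTES_FP16_WEIGHTS = 2      # assumed: weights only, 16-bit
BYTES_TRAINING_STATE = 16   # assumed: 2 (weights) + 2 (grads) + 4 + 4 + 4 (fp32 master, Adam m, v)

def max_params(capacity_bytes, bytes_per_param):
    """Largest parameter count whose per-parameter state fits in the capacity."""
    return capacity_bytes // bytes_per_param

for name, cap in [("WSE-3 SRAM", SRAM_PER_WSE3), ("MemoryX max", MEMORYX_MAX)]:
    print(f"{name}: {max_params(cap, BYTES_FP16_WEIGHTS):.2e} params (fp16 weights only), "
          f"{max_params(cap, BYTES_TRAINING_STATE):.2e} params (with training state)")
```

Under these assumptions, 44GB holds about 22 billion fp16 weights, or under 3 billion parameters once training state is counted, which is why the weights have to live off‑wafer for large models.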

Do they have a system that actually scales to the PB range for training? SRAM alone, while it seems big, is far from enough. Perhaps their position in the AI world is like Groq's: inference.

Yes.

I'm not a big fan of TP Morgan for technical understanding, but Cerebras people provided the information and explanations in the article. However, the article is out of date, and Cerebras also does inference now. Claimed to be the world's fastest.

Cerebras Goes Hyperscale With Third Gen Waferscale Supercomputers

It is a pity that we can’t make silicon wafers any larger than 300 millimeters in diameter. If there were 450 millimeter diameter silicon wafers, as we

www.nextplatform.com


Inference - Cerebras

Cerebras inference - the fastest inference API for generative AI

www.cerebras.ai


HPC requirements are different from training. Inference makes sense. I wish we had more trustworthy independent benchmarks as this part of the industry matures.


Coherent-lite is likely to cover both scale-out and scale-across. As for CPO, indeed the overall DC power saving from CPO is very limited. Perhaps the world will continue to partition into the NV and Google approaches, just like GPU and TPU.

If/when it happens (not by any means certain yet, it's not even been defined!), coherent-lite will be mainly targeted at scale-across; scale-out is more likely to use 800ZR/1600ZR coherent.

CPO rollout is likely to stay limited until we get to the point where getting the high-speed data to pluggables becomes impossible -- even with flyover cables, which 448G will use -- because of loss budgets. At which point there's no choice except moving to CPO, but that's certainly still several years away, which means not this generation or even the next one.