Open Source bi-weekly convo w/ Bill Gurley and Brad Gerstner on all things tech, markets, investing & capitalism. This week, Brad and Clark Tang sit down with Jensen Huang, founder & CEO of NVIDIA, for a sweeping deep dive on the new era of AI. From the $100B partnership with OpenAI to the rise of AI factories, sovereign AI, and protecting the American Dream—this episode explores how accelerated computing is reshaping the global economy. NVIDIA, OpenAI, hyperscalers, and global infrastructure: the AI race is on. Don’t miss this must-listen BG2.



You are currently viewing SemiWiki as a guest which gives you limited access to the site. To view blog comments and experience other SemiWiki features you must be a registered member. Registration is fast, simple, and absolutely free so please, join our community today!


OpenAI, Future of Compute, and the American Dream with Jensen Huang CEO Nvidia

- Thread starter Daniel Nenni


Hard not to be a Jensen Fan Boy after watching this. Puts a whole new perspective on the AI Bubble.

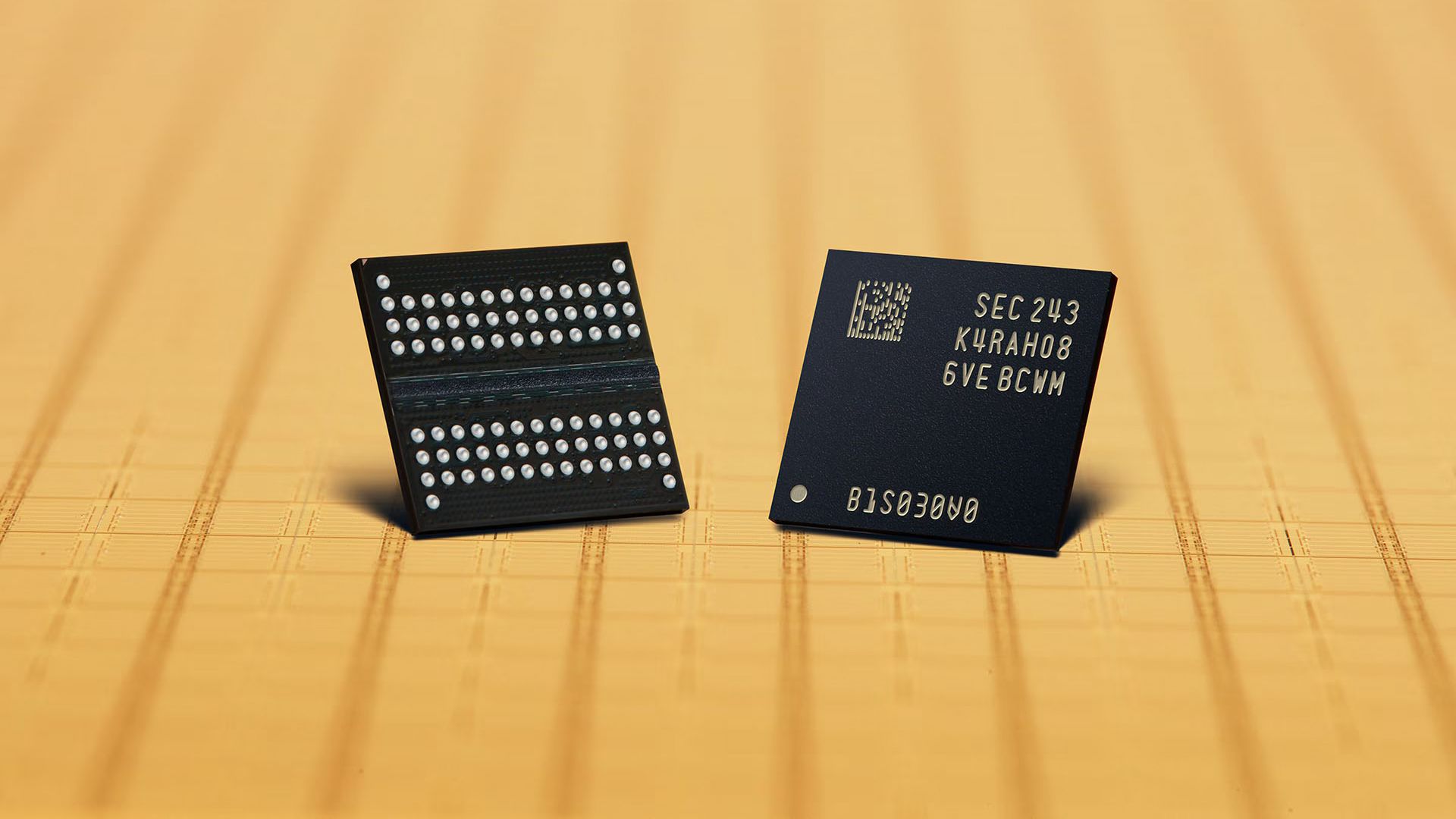

OpenAI's Stargate project to consume up to 40% of global DRAM output — inks deal with Samsung and SK hynix to the tune of up to 900,000 wafers per month

Working at scale.

I think I need a bigger abacus.

He gotta do what he gotta do to get 'em breads.

I watched his speech when he visited Taiwan. He praised this and that in Taiwan, and then he flew to China and praised this and that in China.

商人無國界 ("a businessman knows no borders") or 商人無祖國 ("a businessman has no homeland"): either saying fits Jensen very well.

Jensen is a CEO and businessman. He gotta do what he gotta do.

A few standouts for me:

* Moving general-purpose computation from CPUs to accelerators: SQL/Snowflake/Databricks and associated processing on NVIDIA?

* A new chip/hardware generation every year is a huge differentiator. They use AI to speed up development.

* Extreme co-design: chip, software, rack, system, and data center all developed concurrently.

* ASIC vs. CPU vs. GPU:

- Rubin CPX (an accelerator for long-context processing and diffusion video generation) is a precursor to other application-specific accelerators.

- Maybe an application-specific data-processing chip/subsystem is next.

- The transformer architecture is still changing rapidly, so programmability is still required.

- The only real system-level AI chip competition is Google's TPU.

- ASICs are only useful at mid volume; too much gross margin is given up to the middleman. SmartNICs and transcoders are good candidates for ASICs, but ASICs are not a good option for the fundamental AI compute engine, where the underlying algorithms change regularly.

- Data centers/AI factories are a soup of ASICs and other chips that need to be orchestrated and co-developed with the supply chain.

- NVIDIA is targeting the lowest total cost of ownership (TCO) at the data center level. Someone could offer ASICs for $0 and they would still be less economical. Tokens per gigawatt and tokens per watt are compelling.

* NVLink Fusion and Dynamo are leading the way in creating next-gen open AI solutions and the associated ecosystem.

* Not just a chip company: the AI infrastructure company.
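To make the "free ASIC can still lose" point concrete, here's a rough Python sketch. It assumes the site is power-limited (a fixed number of watts), so revenue scales with tokens per watt while the facility cost is fixed no matter which chip you install. Every number in it (site power, facility cost, token price, throughput, chip cost) is a made-up placeholder, not a figure from the interview or from NVIDIA.

```python
from dataclasses import dataclass

# All figures below are hypothetical placeholders, not NVIDIA or market data.
SITE_POWER_W = 1e9                # assumed 1 GW site: power, not chip count, is the limit
FACILITY_COST_PER_YEAR = 5e9      # assumed land, shell, power, cooling, networking ($/yr)
PRICE_PER_MILLION_TOKENS = 0.50   # assumed $ charged per 1M tokens served
SECONDS_PER_YEAR = 365 * 24 * 3600


@dataclass(frozen=True)
class Accelerator:
    name: str
    chip_cost_per_year: float       # amortized accelerator spend ($/yr), hypothetical
    tokens_per_sec_per_watt: float  # throughput efficiency, hypothetical


def annual_revenue(acc: Accelerator) -> float:
    """Revenue from filling the fixed power budget with this accelerator."""
    tokens = acc.tokens_per_sec_per_watt * SITE_POWER_W * SECONDS_PER_YEAR
    return tokens / 1e6 * PRICE_PER_MILLION_TOKENS


def annual_profit(acc: Accelerator) -> float:
    """Revenue minus the fixed facility cost minus the chip cost, in $/yr."""
    return annual_revenue(acc) - FACILITY_COST_PER_YEAR - acc.chip_cost_per_year


# Hypothetical comparison: a paid GPU system vs. an ASIC given away for free
# that delivers half the tokens per watt.
gpu = Accelerator("GPU system", chip_cost_per_year=10e9, tokens_per_sec_per_watt=2.0)
free_asic = Accelerator("free ASIC", chip_cost_per_year=0.0, tokens_per_sec_per_watt=1.0)

for acc in (gpu, free_asic):
    print(f"{acc.name}: profit ${annual_profit(acc) / 1e9:.2f}B/yr")
```

With these invented inputs it prints about $16.54B/yr for the GPU system and $10.77B/yr for the free ASIC. The GPU option stays ahead as long as its chip cost is below the revenue gap between the two (about $15.8B/yr here), which is the sense in which tokens per watt can matter more than chip price when power is the binding constraint.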


Good summary. The biggest takeaway is the system-level co-design, which gives them the highest performance per watt, gives whoever uses their systems the highest margin for the same watt and, perhaps more importantly, significantly higher revenue opportunities. This is absolutely NV's key moat, and CUDA is only part of it.


AMD should seriously consider acquiring companies like Marvell ASAP to build the same vertical AI infrastructure capability.

hist78


While Jensen is without a doubt a brilliant mind, to me, his take on competition with China and on immigration left a lot to be desired (he mistook the new $100K H-1B application fee for part of an effort to counter illegal immigration).

The two hosts obviously tried hard to pull Jensen back by asking several follow-up questions to soften his BS response to the question about the $100,000 H-1B visa fee.

Jensen also got the facts wrong about decoupling. It was initiated by both Chairman Xi and Trump during Trump’s first term. Biden continued most of those strategies and policies from the first Trump administration. But Jensen Huang, eager to please Trump, made it sound as if it were a mistake started by Biden.

Exactly. All the data center chip companies are moving toward full system-level co-optimization because they see the same economic imperative. But NVIDIA has arrived there first (or maybe tied with Google, but with a more general-purpose approach).

This was, though, an interesting display of the challenge Amazon faces in trying to optimize its data centers with a more general-purpose data center architecture and networking. I think in the long term that general-purpose data center approach is going to limit them, even with their own custom chips. Too much legacy.