
Tachyum Unveils 2nm Prodigy with 21x Higher AI Rack Performance than the Nvidia Rubin Ultra

- Thread starter Daniel Nenni


Yep, Tachyum is a meme; they bump the specifications every time.

> The data sheet said it runs binaries for x86, Arm and RISC-V in addition to its native ISA. Could Prodigy be something like Transmeta from many years ago?

That's what it reads like. The most successful instruction-set emulator in software I'm aware of is Apple's Rosetta 2 (successor to the original Rosetta), which runs x86 code on its M-series processors.

Your post made me go back and read about Transmeta... I forgot it was yet another VLIW design that lost out to superscalar architectures. Transmeta's co-founder, Dave Ditzel, later worked at Intel for a while, apparently on a follow-on generation of the Transmeta design, but nothing seems to have come of it.

The idea of using firmware to direct traffic to the appropriate hardware lives on in many other applications...

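Both Transmeta's Code Morphing Software and Apple's Rosetta 2 rely on translating guest code into native code once and reusing the result, rather than re-decoding each guest instruction every time it executes. A minimal sketch of that translation-cache idea in Python, assuming a made-up four-opcode guest ISA (`add`, `dec`, `jnz`, `halt`) and a hypothetical `ToyTranslator` class; real translators emit machine code and handle much more (optimization, self-modifying code, precise exceptions).

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy guest ISA: (opcode, operand). Not any real instruction set.
GuestInstr = Tuple[str, int]
HostBlock = Callable[[Dict[str, int]], int]  # runs a block, returns next guest PC
HALT = -1


class ToyTranslator:
    """Translates guest basic blocks into host closures once, then reuses them."""

    def __init__(self, program: List[GuestInstr]) -> None:
        self.program = program
        self.cache: Dict[int, HostBlock] = {}  # guest PC -> translated block
        self.translations = 0                  # number of cache misses

    def _translate(self, start: int) -> HostBlock:
        ops: List[GuestInstr] = []
        pc = start
        # A basic block runs until a branch or halt (or the end of the program).
        while pc < len(self.program):
            op, arg = self.program[pc]
            ops.append((op, arg))
            pc += 1
            if op in ("jnz", "halt"):
                break
        fallthrough = pc

        def host_block(regs: Dict[str, int]) -> int:
            for op, arg in ops:
                if op == "add":
                    regs["acc"] += arg
                elif op == "dec":
                    regs["ctr"] -= arg
                elif op == "jnz" and regs["ctr"] != 0:
                    return arg  # taken branch: continue at guest PC `arg`
                elif op == "halt":
                    return HALT
            return fallthrough

        self.translations += 1
        return host_block

    def run(self, regs: Dict[str, int]) -> Dict[str, int]:
        pc = 0
        while pc != HALT and pc < len(self.program):
            block = self.cache.get(pc)
            if block is None:  # first visit: translate and cache
                block = self._translate(pc)
                self.cache[pc] = block
            pc = block(regs)   # later visits skip translation entirely
        return regs


if __name__ == "__main__":
    prog = [("add", 2), ("dec", 1), ("jnz", 0), ("halt", 0)]
    t = ToyTranslator(prog)
    print(t.run({"acc": 0, "ctr": 5}), t.translations)  # {'acc': 10, 'ctr': 0} 2
```

The loop body at guest PC 0 executes five times but is translated only once; that reuse is what lets translation beat plain interpretation on hot code.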

In my view, any company serious about LLM acceleration hardware has to be talking about circuit improvements through architectural innovation and optimization for full-stack attention/transformer-based inference, not "we make a faster, parallel universal processor that now does FP4."

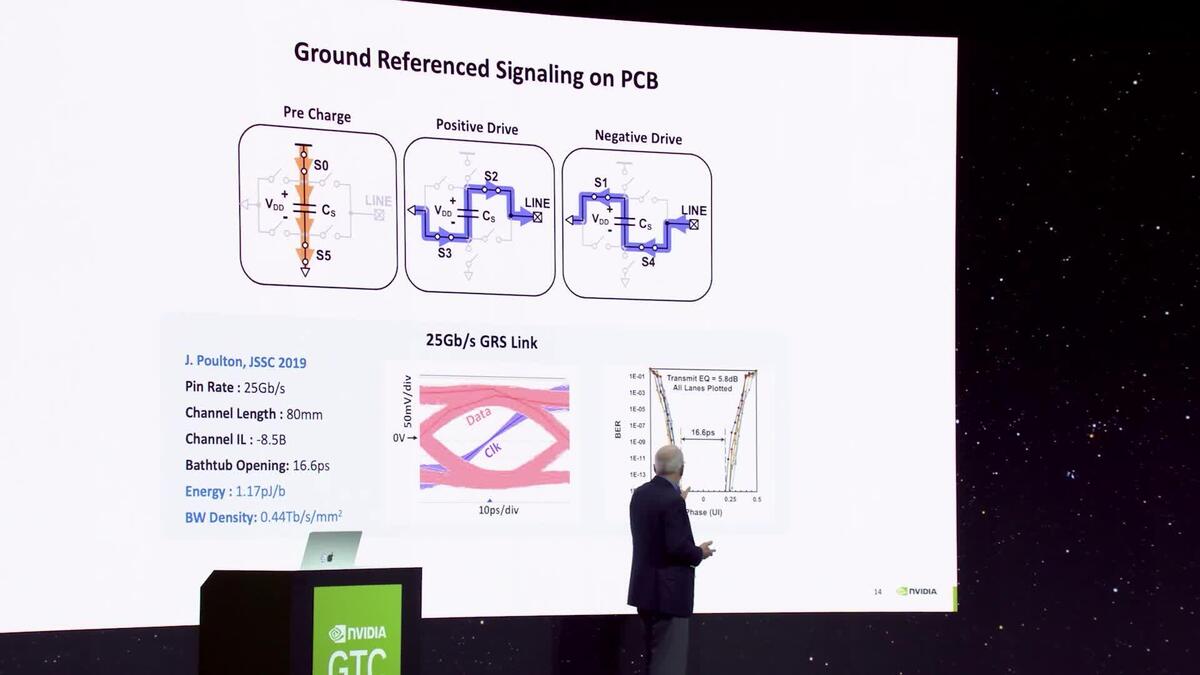

Thought this talk was eye-opening on the kinds of hardware / software challenges that are the bottlenecks today along with possible solutions.


Insights From NVIDIA Research S73202 | GTC 2025 | NVIDIA On-Demand


www.nvidia.com

Probably in reaction to your point: "The weak point of AI hardware is supplying enough memory bandwidth to feed the cores, and it seems like they aren't using HBM. How can it beat Nvidia without HBM?"

“The TDIMM is key in reducing the cost of AI systems trained on all the knowledge from $8 trillion and 276 gigawatts to $78 billion and 1 gigawatt in 2028,” said Dr. Radoslav Danilak, founder and CEO of Tachyum. “The TDIMM ushers in the era of affordable AI trained on all written knowledge produced by humanity, accessible to many companies and nations.”

Minor changes to the DDR6 controller, PHY, and MRDIMM chips will double bandwidth from 6.7 TB/s to 13.5 TB/s in 2027, exceeding Nvidia Rubin's 13 TB/s. TAI reduces bandwidth requirements by up to 4x, so TAI inference behaves as if it had 54 TB/s of bandwidth. Evolutionary changes in 2028 would double the bandwidth of TDIMM-based AI chips to 27 TB/s.

The TDIMM power consumption is expected to be 30% higher for 2x bandwidth. Using newer DRAM chips will put TDIMM power consumption at about the same level as older DDR5 RDIMM.

Tachyum Open Sources 281GB/s TDIMM™ for the Future of AI and Computing | Tachyum

Tachyum® today announced details about how its TDIMM™ is bringing the future of AI and computing at a fraction of the cost.
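The release's figures are easy to sanity-check. A small Python sketch that only recomputes the quoted numbers (taking the 4x TAI factor at its stated best case), plus a rough upper bound on memory-bound decode speed, which is why the bandwidth question matters at all. The 70B-parameter FP4 model is a hypothetical example, and the decode bound assumes every generation step reads all weights once, ignoring KV-cache traffic and compute limits.

```python
"""Back-of-envelope checks on figures quoted in the Tachyum TDIMM release.

Inputs are the release's own numbers. The decode bound uses a hypothetical
model size and assumes decode is purely memory-bandwidth-bound.
"""

# Figures as stated in the release.
BW_BEFORE_TBPS = 6.7   # bandwidth before the DDR6/PHY/MRDIMM changes
BW_2027_TBPS = 13.5    # claimed 2027 bandwidth
RUBIN_TBPS = 13.0      # Nvidia Rubin figure cited in the release
TAI_REDUCTION = 4.0    # "reduces bandwidth up to 4x" (best case)
POWER_RATIO = 1.30     # "30% higher [power] for 2x bandwidth"
COST_BEFORE_USD, COST_AFTER_USD = 8e12, 78e9
POWER_BEFORE_GW, POWER_AFTER_GW = 276.0, 1.0


def effective_bandwidth(raw_tbps: float, traffic_reduction: float) -> float:
    """Bandwidth the workload 'sees' if its memory traffic shrinks by traffic_reduction."""
    return raw_tbps * traffic_reduction


def relative_energy_per_byte(power_ratio: float, bandwidth_ratio: float) -> float:
    """Energy per byte moved relative to the old DIMM: power / throughput."""
    return power_ratio / bandwidth_ratio


def decode_tokens_per_s(bw_tbps: float, n_params: float,
                        bytes_per_param: float, batch: int = 1) -> float:
    """Upper bound on decode rate if each step streams every weight once.

    Simplifying assumption: ignores KV-cache traffic and compute limits.
    One pass over the weights serves `batch` sequences, hence the multiplier.
    """
    weight_bytes = n_params * bytes_per_param
    return batch * bw_tbps * 1e12 / weight_bytes


if __name__ == "__main__":
    print(f"Claimed doubling: {BW_2027_TBPS / BW_BEFORE_TBPS:.2f}x "
          f"({BW_2027_TBPS} TB/s vs Rubin {RUBIN_TBPS} TB/s)")
    print(f"TAI effective bandwidth: {effective_bandwidth(BW_2027_TBPS, TAI_REDUCTION)} TB/s")
    print(f"2028 doubling: {BW_2027_TBPS * 2} TB/s")
    print(f"Energy per byte vs old DIMM: {relative_energy_per_byte(POWER_RATIO, 2.0):.2f}")
    print(f"Cost reduction: {COST_BEFORE_USD / COST_AFTER_USD:.1f}x, "
          f"power reduction: {POWER_BEFORE_GW / POWER_AFTER_GW:.0f}x")
    # Hypothetical 70B-parameter model stored at FP4 (0.5 bytes/param).
    print(f"Decode bound: {decode_tokens_per_s(BW_2027_TBPS, 70e9, 0.5):.0f} tokens/s per sequence")
```

On the release's own numbers, 6.7 to 13.5 TB/s is about a 2.01x step, 30% more power for 2x bandwidth works out to roughly 35% less energy per byte moved, and the 54 TB/s figure is an effective rate that only holds if TAI really cuts memory traffic by 4x.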

So not just a processor that hasn’t been built yet, but also a memory “standard” that still exists only in EDA and CAD diagrams? But I guess with an HQ in Las Vegas, they are going for the extreme long-odds gamblers?
