Despite that skepticism, if you comb through the 53-page paper, there are all kinds of clever optimizations and approaches that DeepSeek has taken to make the V3 model, and we do believe that they cut down on inefficiencies and boost training and inference performance on the iron DeepSeek has to play with.

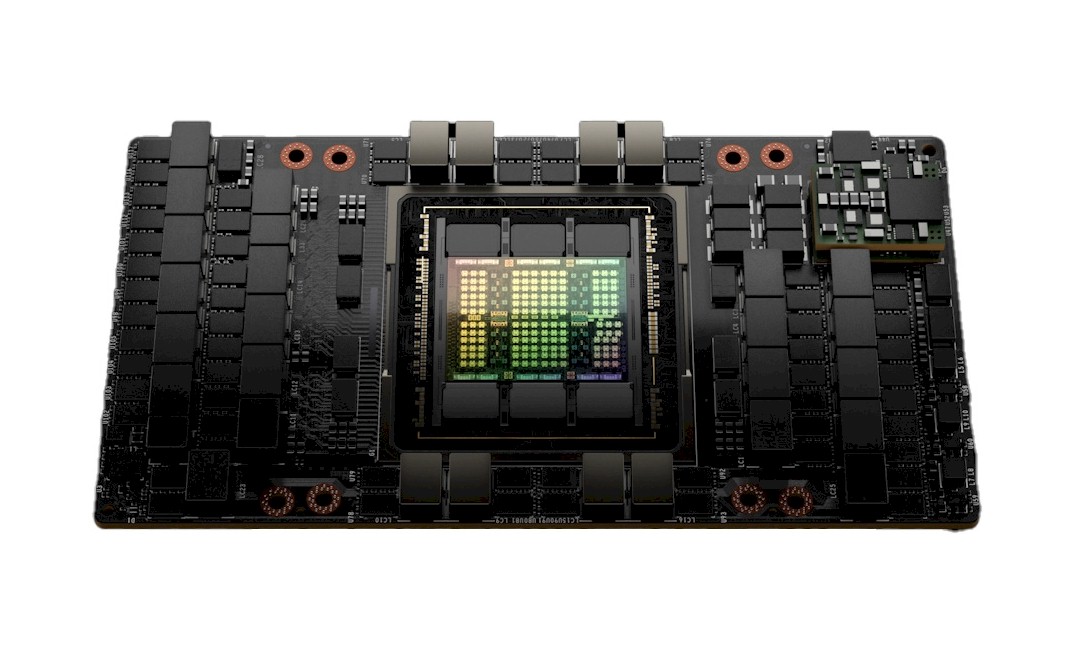

The key innovation in the approach taken to train the V3 foundation model, we think, is the use of 20 of the 132 streaming multiprocessors (SMs) on the Hopper GPU to work, for lack of a better term, as a communication accelerator and scheduler for data as it passes around the cluster while the training run chews through the tokens and generates the weights for the model's parameters. As far as we can surmise, this approach, which the V3 paper describes as “the overlap between computation and communication to hide the communication latency during computation,” uses SMs to create what is in effect an L3 cache controller and a data aggregator between GPUs that are not in the same node.
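To put the 20-of-132 figure in perspective, here is the back-of-the-envelope arithmetic as a small Python sketch. The SM counts come straight from the text above; the function name `sm_split` is ours, purely for illustration.

```python
# Hypothetical illustration: how much of a Hopper GPU's SM budget goes to communication.
TOTAL_SMS = 132  # SMs per Hopper GPU, as stated in the text
COMM_SMS = 20    # SMs dedicated to communication, per the V3 paper

def sm_split(total: int, comm: int) -> tuple[int, float]:
    """Return (SMs left for compute, fraction of SMs reserved for communication)."""
    if not 0 <= comm <= total:
        raise ValueError("comm SMs must be between 0 and total")
    return total - comm, comm / total

compute_sms, comm_fraction = sm_split(TOTAL_SMS, COMM_SMS)
print(f"{compute_sms} SMs for compute, {comm_fraction:.1%} reserved for communication")
```

That works out to roughly 15 percent of each GPU's SMs given over to moving data rather than doing math, which is the price DeepSeek pays to keep the other 112 SMs fed.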

As the paper puts it, this communication accelerator, which is called DualPipe, has the following tasks:

- Forwarding data between the InfiniBand and NVLink domain while aggregating InfiniBand traffic destined for multiple GPUs within the same node from a single GPU.

- Transporting data between RDMA buffers (registered GPU memory regions) and input/output buffers.

- Executing reduce operations for all-to-all combine.

- Managing fine-grained memory layout during chunked data transferring to multiple experts across the InfiniBand and NVLink domain.
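The first task, aggregating InfiniBand traffic bound for several GPUs in one node, can be sketched as a routing plan: send one InfiniBand transfer per remote node to a relay GPU, then fan out over NVLink. This is a toy model, not DeepSeek's kernel. The 8-GPUs-per-node layout and the choice of relay (the GPU on the target node with the same local index as the sender, which is our reading of the V3 paper) are assumptions, and `plan_transfers` is a hypothetical name.

```python
from collections import defaultdict

GPUS_PER_NODE = 8  # assumption: 8 GPUs per node

def plan_transfers(src_gpu: int, dest_gpus: list[int], gpus_per_node: int = GPUS_PER_NODE):
    """Return (ib_sends, nvlink_forwards) as lists of (from_gpu, to_gpu) pairs.

    Destinations are grouped by node so each remote node gets a single IB send,
    after which the relay GPU forwards the data to the other destinations over NVLink.
    """
    src_node = src_gpu // gpus_per_node
    by_node = defaultdict(list)
    for g in sorted(set(dest_gpus)):
        by_node[g // gpus_per_node].append(g)

    ib_sends, nvlink_forwards = [], []
    for node, gpus in by_node.items():
        if node == src_node:
            # Same node: NVLink only, no InfiniBand hop needed.
            nvlink_forwards.extend((src_gpu, g) for g in gpus if g != src_gpu)
            continue
        # Assumed relay: the GPU on the target node with the sender's local index.
        relay = node * gpus_per_node + src_gpu % gpus_per_node
        ib_sends.append((src_gpu, relay))
        nvlink_forwards.extend((relay, g) for g in gpus if g != relay)
    return ib_sends, nvlink_forwards

ib, nv = plan_transfers(0, [3, 9, 12, 17])
print("IB sends:", ib)
print("NVLink forwards:", nv)
```

Here GPU 0 reaches three remote GPUs (9, 12, and 17) with only two InfiniBand sends, because 9 and 12 share a node; the cheaper, fatter NVLink links handle the last hop.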

In another sense, then, DeepSeek has created its own on-GPU virtual DPU for doing all kinds of SHARP-like processing (in the spirit of Nvidia's in-network Scalable Hierarchical Aggregation and Reduction Protocol) associated with all-to-all communication in the GPU cluster.
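The “reduce operations for all-to-all combine” are the SHARP-like part. In a mixture-of-experts layer, each token's outputs from the experts it was routed to come back and are summed, weighted by the router's gate scores. A minimal sketch of that reduction for a single token follows; the function name and the toy numbers are ours, not the paper's.

```python
def combine(expert_outputs: dict[int, list[float]], gate_weights: dict[int, float]) -> list[float]:
    """Gate-weighted sum of one token's expert outputs (the 'combine' reduction)."""
    if set(expert_outputs) != set(gate_weights):
        raise ValueError("need exactly one gate weight per expert output")
    dim = len(next(iter(expert_outputs.values())))
    out = [0.0] * dim
    for expert_id, vec in expert_outputs.items():
        w = gate_weights[expert_id]
        for i, x in enumerate(vec):
            out[i] += w * x  # accumulate this expert's weighted contribution
    return out

print(combine({3: [1.0, 2.0], 7: [3.0, -1.0]}, {3: 0.75, 7: 0.25}))
```

Doing this accumulation on the communication SMs as the pieces arrive, rather than after all of them land, is what makes the comparison to in-network reduction apt.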

Here is an important paragraph about DualPipe:

“As for the training framework, we design the DualPipe algorithm for efficient pipeline parallelism, which has fewer pipeline bubbles and hides most of the communication during training through computation-communication overlap. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. In addition, we also develop efficient cross-node all-to-all communication kernels to fully utilize InfiniBand and NVLink bandwidths. Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. Combining these efforts, we achieve high training efficiency.”
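Why a constant computation-to-communication ratio yields “near-zero” overhead can be seen with a toy timing model. This is a generic two-stage compute/communicate pipeline, not DualPipe's actual bidirectional schedule, and the millisecond figures are made up for illustration.

```python
def step_time(compute_ms: float, comm_ms: float, chunks: int, overlap: bool) -> float:
    """Toy timing model for one training step split into equal chunks.

    Without overlap, every chunk computes and then communicates.
    With overlap, chunk i's communication runs while chunk i+1 computes,
    so the slower of the two streams sets the pace, plus pipeline fill and drain.
    """
    c, m = compute_ms / chunks, comm_ms / chunks
    if not overlap:
        return chunks * (c + m)
    # The first compute (fill) and the last transfer (drain) cannot be hidden.
    return c + (chunks - 1) * max(c, m) + m

print(step_time(100, 80, 10, overlap=False))
print(step_time(100, 80, 10, overlap=True))
```

With 100 ms of compute and 80 ms of communication, the serial step takes 180 ms while the overlapped one takes 108 ms, and the leftover 8 ms shrinks further as the work is cut into more chunks. The catch is that this only holds while communication per chunk stays at or below compute per chunk, which is exactly the ratio DeepSeek says it must hold constant as the model scales.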

The paper does not say how much of a boost DualPipe delivers, but consider a hypothetical. Suppose a GPU is waiting for data 75 percent of the time because of inefficient communication, and hiding latency with scheduling tricks, much as L3 caches do for CPU and GPU cores, cuts that compute delay so that DeepSeek pushes computational efficiency to near 100 percent on those 2,048 GPUs. Then the cluster would start acting like it had 8,192 GPUs (with some of the SMs missing, of course) that lacked DualPipe and were therefore not running as efficiently. OpenAI’s GPT-4 foundation model was trained on 8,000 of Nvidia’s “Ampere” A100 GPUs, which is like 4,000 H100s (sort of). We are not saying this is the ratio DeepSeek attained; we are just saying this is how you might think about it.
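The arithmetic behind that hypothetical is simple enough to write down. The 25 percent and 100 percent utilization figures are the illustrative ones from the paragraph above, not measured numbers, and the optional `sm_fraction` haircut for the 20 SMs diverted to communication is our own rough way of accounting for the “missing” SMs.

```python
def effective_gpus(physical: int, baseline_util: float, improved_util: float,
                   sm_fraction: float = 1.0) -> float:
    """GPUs at baseline utilization needed to match the improved cluster's useful work.

    sm_fraction optionally discounts for SMs diverted away from compute.
    """
    if not 0 < baseline_util <= 1 or not 0 < improved_util <= 1:
        raise ValueError("utilizations must be in (0, 1]")
    return physical * improved_util / baseline_util * sm_fraction

print(effective_gpus(2048, 0.25, 1.0))                     # ignoring the diverted SMs
print(round(effective_gpus(2048, 0.25, 1.0, 112 / 132)))   # discounting 20 of 132 SMs
```

Going from 25 percent to 100 percent utilization is a 4X multiplier, hence 2,048 × 4 = 8,192. Knocking out the 20 communication SMs trims that to roughly 6,950 GPU-equivalents under this crude model.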