It’s been a while now since OpenAI showcased its super-smart, all-singing, all-dancing GPT-4o AI, with its spookily human-like speech patterns. Though the company has released a number of new tools, like search, and smaller AI updates, like “reasoning,” the long-rumored next-generation GPT-5 AI hasn’t arrived. Rumors recently emerged that the company was working on a big GPT release codenamed Orion, but OpenAI pushed back, denying that any such software was due this year. Now, thanks to data that reached the industry news site The Information, we may know why there’s no GPT-5 yet: OpenAI is finding that the rate of improvement of its AI models is slowing as the technology evolves.



Why OpenAI Is Trying New Strategies to Deal With an AI Development Slowdown

- Thread starter XYang2023


What if AI doesn’t just keep getting better forever?

New reports highlight fears of diminishing returns for traditional LLM training.

arstechnica.com


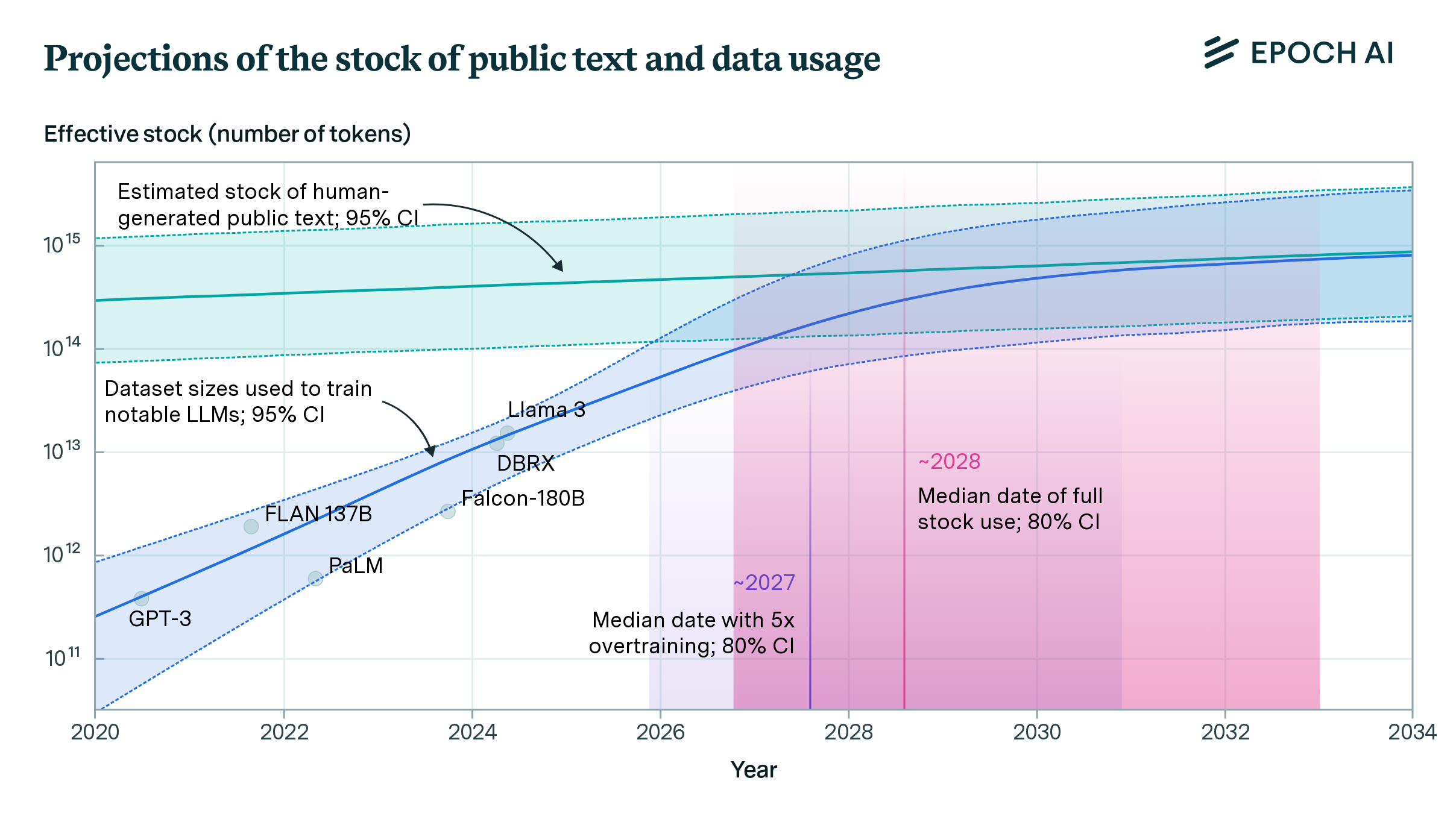

Will We Run Out of Data? Limits of LLM Scaling Based on Human-Generated Data

We estimate the stock of human-generated public text at around 300 trillion tokens. If trends continue, language models will fully utilize this stock between 2026 and 2032, or even earlier if intensely overtrained.
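The projection in that abstract boils down to compounding arithmetic: if the number of tokens used to train frontier models grows by a roughly constant factor each year, you can count the years until that demand exceeds a fixed stock. Here is a minimal Python sketch of the calculation. Only the ~300 trillion token stock comes from the abstract; the 2024 starting dataset size (15 trillion tokens) and the 2.5× annual growth factor are hypothetical inputs chosen for illustration, not figures from the paper.

```python
# Minimal sketch: count the years until training-data demand exceeds a fixed stock.
# Only STOCK_TOKENS comes from the abstract; the starting dataset size and the
# growth factor used below are hypothetical illustration values.

STOCK_TOKENS = 300e12  # ~300 trillion tokens of public human-generated text


def exhaustion_year(stock_tokens: float, start_year: int,
                    start_tokens: float, growth_per_year: float) -> int:
    """Return the first year in which the projected dataset size reaches the stock."""
    year, tokens = start_year, start_tokens
    if tokens < stock_tokens and growth_per_year <= 1.0:
        raise ValueError("demand never catches up unless growth_per_year > 1")
    while tokens < stock_tokens:
        year += 1
        tokens *= growth_per_year  # compound growth, one step per year
    return year


if __name__ == "__main__":
    # Hypothetical inputs: 15T tokens in 2024, growing 2.5x per year.
    print(exhaustion_year(STOCK_TOKENS, 2024, 15e12, 2.5))  # → 2028
```

Faster growth pulls the date earlier, which is the abstract's point about intense overtraining (using more tokens per parameter): with the same hypothetical start, `exhaustion_year(STOCK_TOKENS, 2024, 15e12, 3.0)` returns 2027.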

md yasir bashir

New member

It looks like OpenAI’s running into the law of diminishing returns, where huge improvements get harder and harder to achieve as the tech gets more advanced. Guess we’ll have to be patient and see how it all plays out.
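One common way to picture these diminishing returns is a power-law scaling curve, where loss falls as a power of training compute toward an irreducible floor: L(C) = L_inf + a·C^(-alpha). Under such a curve, every 10× increase in compute shrinks the reducible part of the loss by the same factor, 10^(-alpha), so the absolute improvement from each successive 10× gets smaller. The Python sketch below assumes that functional form; the constants (irreducible=1.7, scale=10.0, alpha=0.05) are hypothetical values picked for illustration, not fitted to any OpenAI model.

```python
# Sketch of diminishing returns under an assumed power-law scaling curve:
#   loss(C) = irreducible + scale * C**(-alpha)
# All constants are hypothetical illustration values, not fitted to real models.


def loss(compute: float, irreducible: float = 1.7,
         scale: float = 10.0, alpha: float = 0.05) -> float:
    """Assumed training loss as a function of training compute (e.g. FLOPs)."""
    return irreducible + scale * compute ** (-alpha)


def gains_per_10x(start: float, steps: int) -> list[float]:
    """Loss reduction obtained from each successive 10x increase in compute."""
    gains = []
    compute = start
    for _ in range(steps):
        gains.append(loss(compute) - loss(compute * 10))
        compute *= 10
    return gains


if __name__ == "__main__":
    for i, g in enumerate(gains_per_10x(1e20, 4)):
        print(f"10x step {i + 1}: loss drops by {g:.4f}")
```

Under this assumed curve, the ratio between consecutive gains is exactly 10^(-alpha), so progress shrinks geometrically with each 10× of compute rather than stopping outright.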

My guess is that this might have some implications for the training market. People are saying that we now have inference-time scaling laws. My understanding of inference-time scaling laws is that System 2 (slow, deliberate reasoning) is sometimes better than System 1 (fast, intuitive responses). However, System 2 doesn't necessarily create high demand for GPUs, since that part operates differently, with more symbolic processing. Based on my recent reading, improving reasoning performance doesn’t depend heavily on GPUs. Realistically, though, I don’t know, and I’m interested to find out what the emerging solutions will be.


I'm waiting to see OpenAI's revenue streams. They certainly know how to raise money, but show me the revenue. Show me the ROI on the big AI infrastructure builds. Elon Musk and his xAI are coming in hot via the White House!

md yasir bashir

New member

It’ll be interesting to see whether emerging approaches, such as more symbolic AI or hybrid models that combine neural networks with rule-based reasoning, can lead to breakthroughs without relying as heavily on GPUs.
