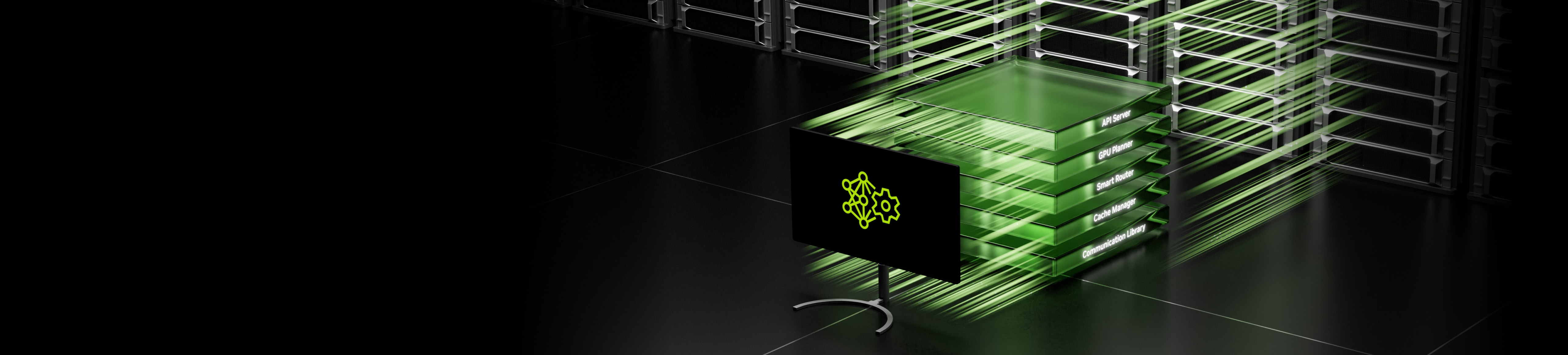

Nvidia's CUDA core processors are designed to take advantage of parallel processing to speed up compute-intensive applications such as AI, 3D rendering, gaming simulation and more. (Nvidia)

Nvidia is arguably the top contender in high-performance computing, gaming and AI processing, far surpassing AMD and Intel for the performance crown. But what sets Nvidia's chips apart in a way other manufacturers haven't been able to replicate? The answer lies in parallel processing, a technique that increases the computational speed of computer systems by performing multiple data-processing operations simultaneously.

Unlike CPUs (Central Processing Units), which have a small number of cores that are each optimized for working through tasks sequentially, GPUs (Graphics Processing Units) can use thousands of simpler cores to handle many tasks at the same time. That architectural advantage provides the leverage needed to handle today's AI algorithms, which require massive amounts of data processing. To put that into perspective, imagine one person trying to build a skyscraper versus thousands working together.

Enter CUDA

With AI looming on the horizon, Nvidia saw a need for a robust software environment that could take full advantage of the company's powerful hardware, and CUDA was the result. The parallel computing platform and programming model, first introduced in 2006, allows developers to tap the GPU's parallel processing capabilities for demanding AI applications. (CUDA stands for Compute Unified Device Architecture.)

Nvidia's move not only opened the door to new possibilities but also laid the groundwork for a CUDA ecosystem that carried the company to the top of the GPU food chain. Its flagship AI GPUs, combined with the company's CUDA software, gave it such a head start on the competition that switching to an alternative now seems almost unthinkable for many large organizations.

So, what does CUDA have to offer? Here’s a look at some core features:

- Massive Parallelism: The CUDA architecture is designed to leverage thousands of CUDA cores, allowing many threads to execute at once, which makes it ideal for tasks such as image rendering, scientific calculations, machine learning, computer vision, big data processing and more. Each CUDA core is a small hardware processing unit within an Nvidia GPU; together, the cores let the GPU handle thousands of threads simultaneously.

- Hierarchical Thread Organization: CUDA groups threads into blocks and blocks into grids, making it easier to manage and optimize parallel execution and helping developers make full use of hardware resources.

- Dynamic Parallelism: This allows kernels (functions executed on the GPU) to launch additional kernels to enable more flexible and dynamic programming models and simplify code for recursive algorithms or adaptive workloads.

- Unified Memory: Nvidia's unified memory gives the CPU and GPU a single shared address space, simplifying memory management and improving performance by automatically migrating data to the memory of whichever processor is accessing it.

- Shared Memory: Each block of threads has access to its own fast on-chip shared memory, which lets threads in the block exchange data far more quickly than through global memory (the GPU's larger, slower device memory) and improves performance.

- Optimized Libraries: The CUDA software comes with a suite of optimized libraries to increase performance, including cuBLAS for linear algebra, cuDNN for deep learning, Thrust for parallel algorithms and more.

- Error Handling/Compiler Support: CUDA offers built-in error-handling capabilities that diagnose issues during the development phase to improve efficiency. It also features compiler support for developers to create code using familiar syntax, making it easy to inject GPU computing into existing applications.
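
To make the thread hierarchy above concrete, here is a minimal sketch in plain Python that emulates, on the CPU, how a one-dimensional CUDA launch gives each thread a unique global index. It is not GPU code: the `launch`, `vector_add` and `blocks_needed` names are hypothetical helpers invented for this illustration, and the loops run one after another where a GPU would run the threads in parallel. The index formula mirrors the `blockIdx.x * blockDim.x + threadIdx.x` expression commonly used in CUDA C++ kernels.

```python
# CPU-only emulation of CUDA's 1-D grid/block/thread indexing.
# All function names here are hypothetical, for illustration only.

def blocks_needed(n, block_dim):
    """Round up so that grid_dim * block_dim covers all n elements."""
    return (n + block_dim - 1) // block_dim


def launch(kernel, grid_dim, block_dim, *args):
    """Call the kernel once per (block, thread) pair.

    A GPU would run these invocations in parallel; this sketch runs
    them sequentially, which gives the same result for independent work.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add(block_idx, block_dim, thread_idx, a, b, out):
    """Each emulated thread adds exactly one pair of elements."""
    # Same arithmetic as blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
    i = block_idx * block_dim + thread_idx
    # Bounds guard: the last block may contain surplus threads.
    if i < len(out):
        out[i] = a[i] + b[i]


n = 10
a = list(range(n))            # 0, 1, ..., 9
b = [10 * x for x in a]       # 0, 10, ..., 90
out = [0] * n

block_dim = 4                             # threads per block
grid_dim = blocks_needed(n, block_dim)    # 3 blocks, i.e. 12 threads

launch(vector_add, grid_dim, block_dim, a, b, out)
print(out)  # [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

With 10 elements and 4 threads per block, the launch needs 3 blocks, so 12 threads are created and the bounds check idles the last two. Real kernels typically carry the same guard, because the array length is rarely an exact multiple of the block size.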

CUDA Applications

Since its introduction in 2006, CUDA has been deployed in thousands of applications and research papers, and it is supported by an installed base of over 500 million GPUs found in PCs, laptops, workstations, data centers and even supercomputers. CUDA cores have been tapped for astronomy, biology, chemistry, physics, data mining, manufacturing, finance, and other computationally intense fields; however, AI has quickly taken the application crown.

Nvidia's CUDA cores are indispensable for training and deploying neural networks and deep learning models, workloads that take full advantage of the cores' parallel processing capabilities. To put that into perspective, a dozen Nvidia H100 GPUs can deliver deep learning performance equivalent to that of 2,000 midrange CPUs. That enhanced performance is ideal for complex tasks such as image and speech recognition. Natural language processing (NLP) and large language models (LLMs), such as GPT, also benefit from CUDA core processing, making it easier for developers to deploy sophisticated algorithms or enhance applications like chatbots, translation services, and text analysis.

Deep Genomics' BigRNA can accurately predict diseases based on patients' genetic variations.

Nvidia's CUDA technology has also been put to work in healthcare, where it enables faster and more accurate diagnostics via deep learning algorithms. CUDA-accelerated GPUs drive molecular-scale simulations, help visualize organs and help predict the effectiveness of treatments. They're also used to analyze complex data from MRIs and CT scans, improving early detection of diseases. Toronto-based Deep Genomics is using CUDA to power deep learning models that help it understand how genetic variations can lead to disease and how best to treat them through the discovery of new medicines. Tempus is another medical company that's using Nvidia's GPUs for deep learning to speed the analysis of medical images, and its technology is set to be deployed in GE Healthcare MRI machines to help diagnose heart disease.

CUDA technology also has applications in the finance industry, where Nvidia GPUs process large volumes of transaction data to give banks real-time fraud detection and risk management. AI algorithms can analyze complex financial patterns, improving market prediction accuracy and investment strategies. The same is true for stock brokerages, which use AI algorithms to execute orders in milliseconds, optimizing financial returns.

Academia has utilized CUDA technology as well, combining it with OpenCL APIs to develop and optimize AI algorithms for new drug discoveries, making GPUs integral to their studies. Institutions such as Stanford University have been using CUDA technology since its release and have used the platform as a base for learning how to program AI algorithms and deep learning models.

Researchers at the University of Edinburgh used CUDA to develop and accelerate the simulation of new quantum machine learning (QML) methods to reduce the qubit count needed to study large data sets.

Researchers at the University of Edinburgh's Quantum Software Lab have also utilized the technology to develop and simulate new QML methods to significantly reduce the qubit count necessary to study large data sets. Using CUDA-Q simulation toolkits and Nvidia GPUs, they were able to overcome scalability issues and simulate complex QML clustering methods on problem sizes up to 25 qubits. The breakthrough is essential for developing quantum-accelerated supercomputing applications.

Retail companies have also jumped on the AI bandwagon, using it to enhance customers' experiences via personalized recommendations and to improve inventory management. Generative AI models take advantage of data science to predict consumer behavior and tailor marketing strategies. Lowe's, for example, uses GPU-accelerated AI for several applications, including supply chain optimization and dynamic pricing models. CUDA technology helps analyze large datasets quickly, which improves demand forecasting and ensures efficient stock replenishment. The company recently partnered with Nvidia to develop computer vision applications, including enhanced self-checkouts that can help prevent theft or detect in real time when a product has been left in a cart unintentionally.

Nvidia’s lead comes back to CUDA

It's easy to see why CUDA has propelled Nvidia to the number one spot in high-performance computing: it unlocks the full potential of parallel processing on the company's GPUs. The ability to harness thousands of cores for processing large amounts of data has made the technology a valuable platform for many industries, from healthcare and academia to retail and finance. With its extensive CUDA ecosystem, optimized libraries and hardware innovations, Nvidia has cemented its leadership in the AI boom far above AMD and Intel. As AI applications continue to advance, CUDA looks set to remain the gold standard for researchers and developers pushing the limits of what's possible.

Grace is a Chicago-based engineer.