
Illustration by Alex Castro / The Verge

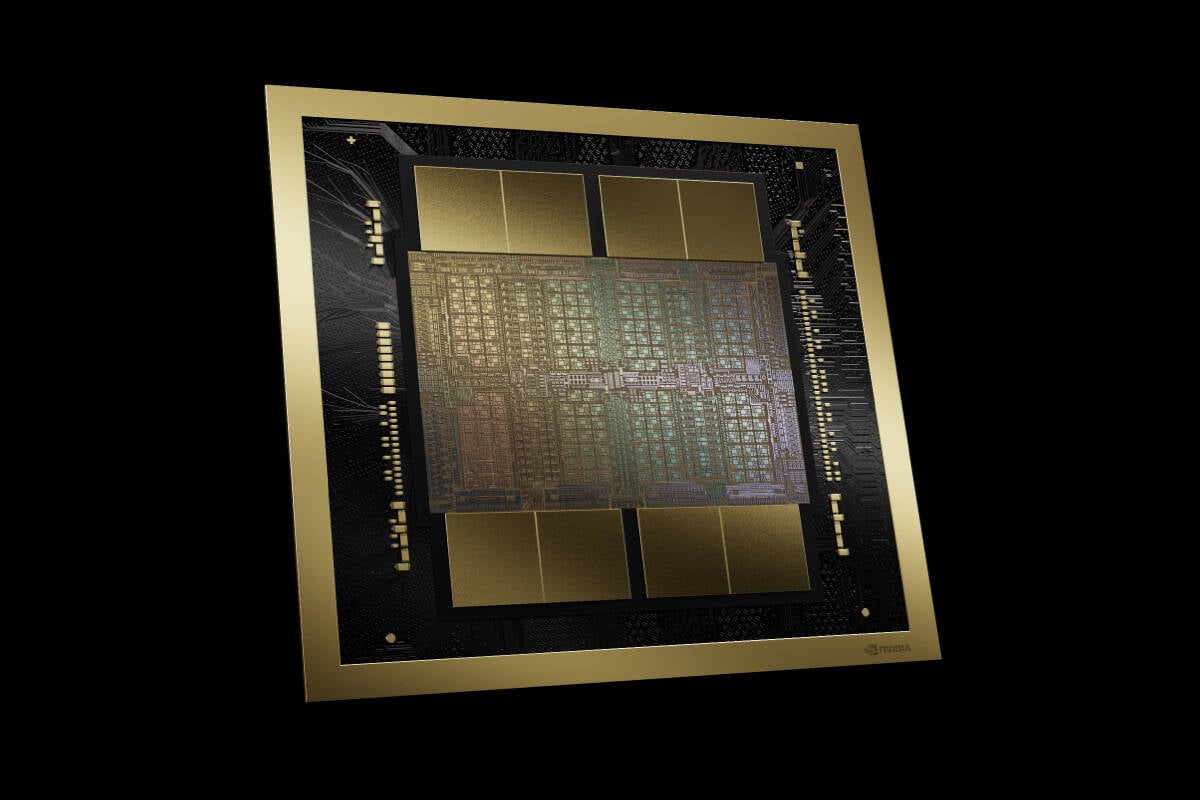

Nvidia has reportedly told Microsoft and at least one other cloud provider that its “Blackwell” B200 AI chips will take at least three months longer to produce than was planned, according to The Information. The delay is the result of a design flaw discovered “unusually late in the production process,” according to two unnamed sources, including a Microsoft employee, cited by the outlet.

B200 chips are the follow-up to the supremely popular and hard-to-get H100 chips that power vast swaths of the artificial intelligence cloud landscape (and helped make Nvidia one of the most valuable companies in the world). Nvidia expects production of the chip "to ramp in 2H," meaning the second half of the year, according to a statement that Nvidia spokesperson John Rizzo shared with The Verge. "Beyond that, we don't comment on rumors."

Nvidia is now reportedly working through a fresh set of test runs with chip producer Taiwan Semiconductor Manufacturing Company and won't ship large numbers of Blackwell chips until the first quarter of next year. The Information writes that Microsoft, Google, and Meta have ordered "tens of billions of dollars" worth of the chips.

The report comes just months after Nvidia said that “Blackwell-based products will be available from partners” starting in 2024. The new chips are supposed to kick off a new yearly cadence of AI chips from the company as several other tech firms, such as AMD, work to spin up their own AI chip competitors.

Nvidia reportedly delays its next AI chip due to a design flaw

Nvidia’s yearly AI chip refresh may not start this year.