Jensen did a really nice job. The media Q&A was better than the keynote. We have definitely entered the age of AI, and Nvidia is positioned perfectly. Here is a transcription from Ian Cutress, who typed it in while Jensen spoke! If you are not a subscriber to his newsletter, you should take a look:

More Than Moore, by Dr. Ian Cutress (morethanmoore.substack.com): a Substack publication covering the latest in semiconductors, including silicon, AI, processors, and market trends.

The following is a transcribed Q&A from a one-hour session with the global press. Wording has been cleaned up for readability and is otherwise provided as-is. Due to the timing of the Q&A, paid subscribers will have initial access for an undisclosed period.

Initial Remarks From Jensen

There are two transitions in computing: in how the computer is made, and from general-purpose computing to accelerated computing. It's about what the computer can do. Accelerated computing leads to Generative AI, and because of Generative AI, a new instrument has emerged: an AI generator. Some people call it a datacenter. A standard datacenter holds files and lots of other things. But a Generative AI datacenter is doing, or processing, one thing, for one person or one company, and producing AI: it produces tokens. When you interact with ChatGPT, it's generating tokens, the floating-point numbers that become words, images, or sounds. In the future, those tokens will be proteins, chemicals, computer-animated machines, and robots. Animating a machine is like speaking: if a computer can speak, why can it not move like a machine? These token generators are a new category and a new industry, and that's why we say AI is an industrial revolution. It's new: these new rooms and buildings are AI factories. In the last industrial revolution, the input was water and fuel, and the output was electricity. Now the input is data, and the output is tokens. Tokens can be distributed, and they are valuable. Datacenters are counted as company cost, as operating expense and capital expenditure, so you think of the datacenter as a cost. But factories make money. This new world is a Generative AI world, and it has AI factories.

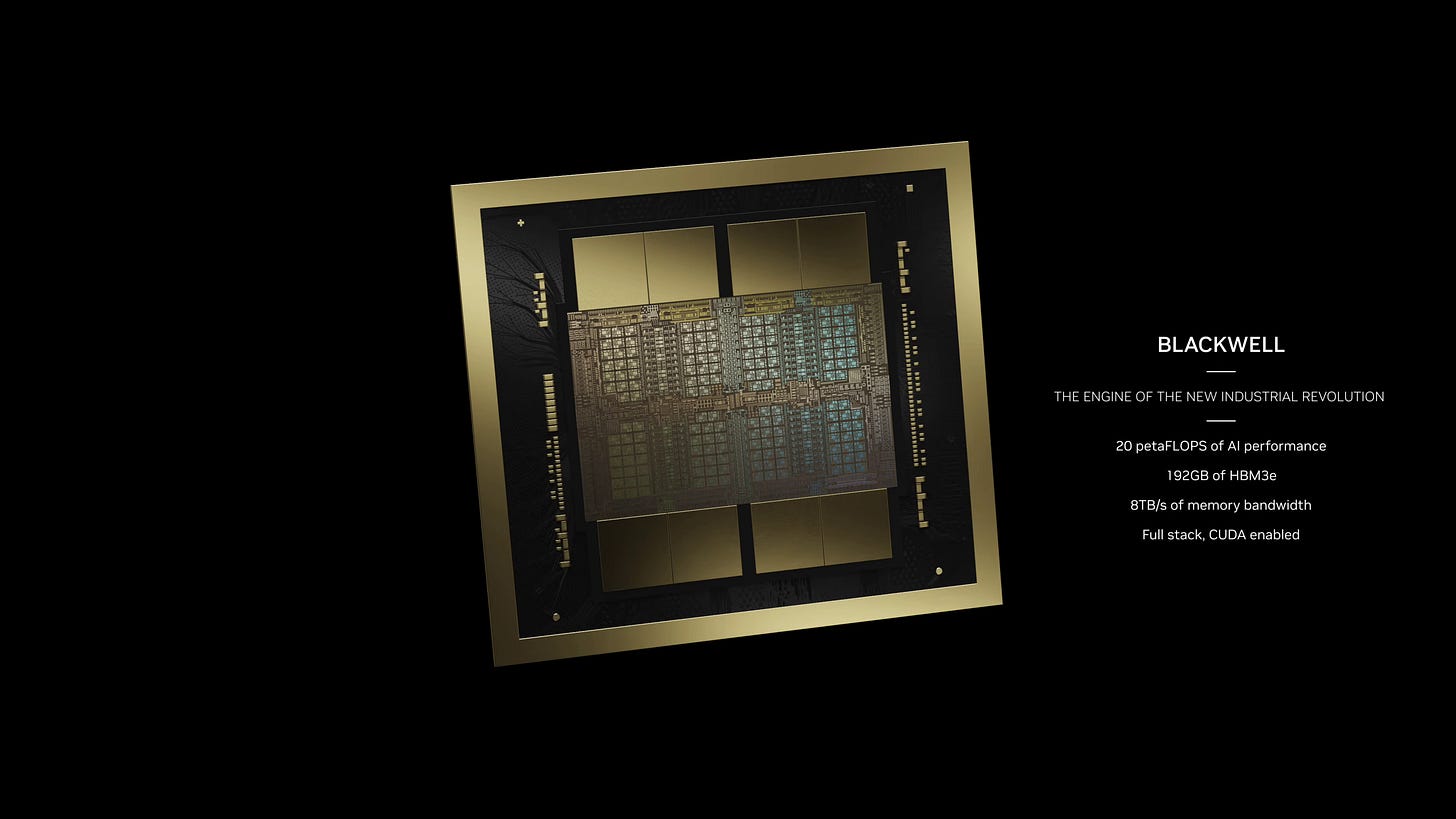

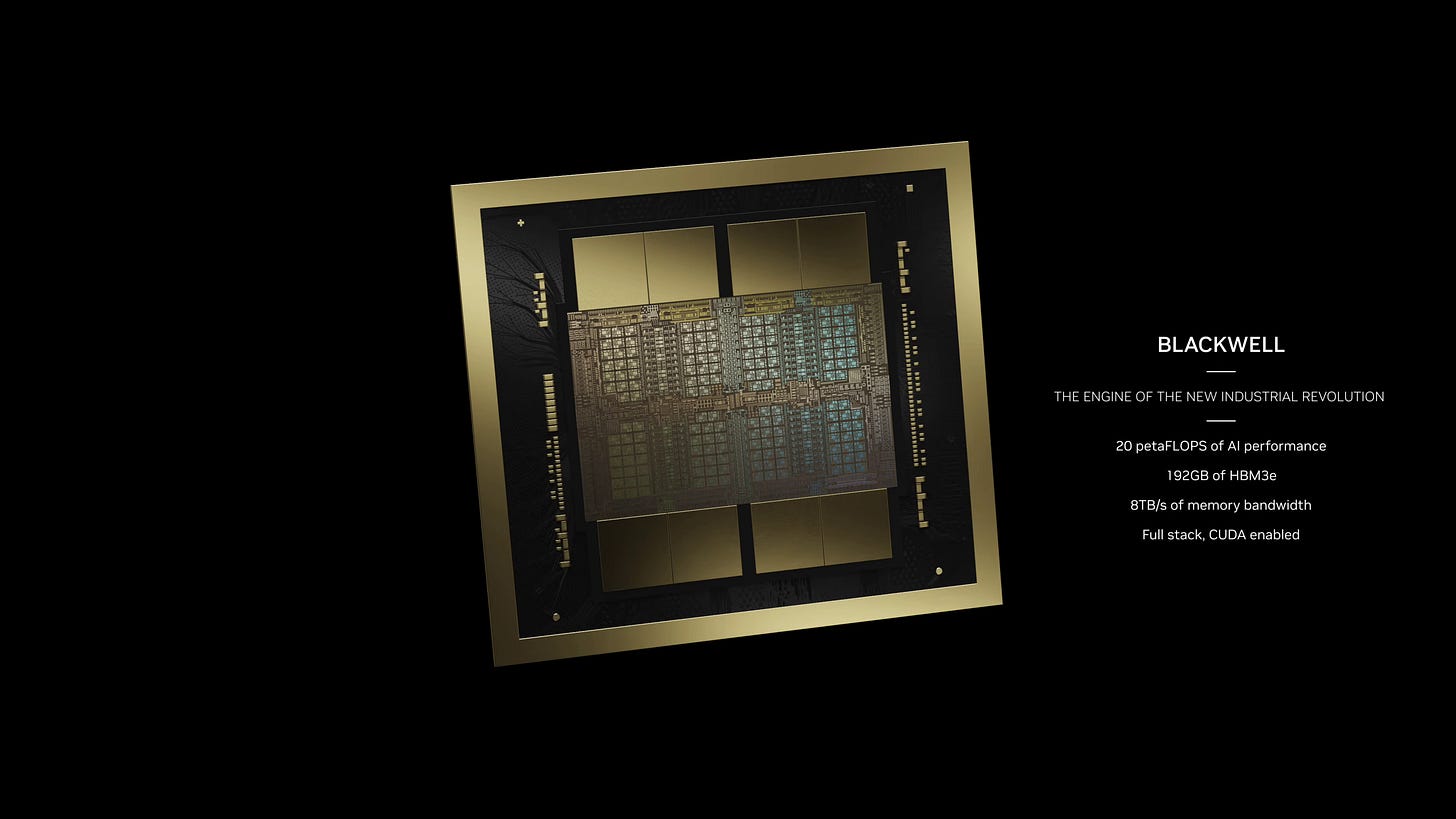

In this new world, software is complicated. Nothing is easy about GPT: the software is very large, and getting larger, and it needs so many different things. Today it learns from words and images; now it also learns from video, with reinforcement learning, with synthetic data, and through debate, like AlphaGo. We are training AI to learn in different ways, making it more sophisticated over time. We created a new generation of computing for the future: the trillion-parameter future, enabled by Blackwell. It's revolutionary: Blackwell is very performant and energy-efficient. For example, take training a 1.8-trillion-parameter GPT model in 90 days. Instead of 15 MW with H100, it takes 4 MW with Blackwell. We reduce the power needed to do the work. Efficiency is work divided by input: the work is the training run, and the input is 4 MW instead of 15 MW.
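As a rough worked example of the efficiency claim above, the sketch below converts the quoted power figures (15 MW on H100, 4 MW on Blackwell, 90 days) into total energy for one training run. It assumes, as a simplification not stated in the remarks, that each system draws its quoted power continuously for the full 90 days; the helper name `training_energy_mwh` is hypothetical.

```python
# Worked arithmetic for the 90-day, 1.8T-parameter training example.
# ASSUMPTION: constant power draw for the whole run (a simplification).
# training_energy_mwh is a hypothetical helper, not an Nvidia API.

HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in MWh for a constant power draw (MW) sustained over `days`."""
    return power_mw * days * HOURS_PER_DAY


hopper_mwh = training_energy_mwh(15, 90)    # H100 figure quoted in the remarks
blackwell_mwh = training_energy_mwh(4, 90)  # Blackwell figure quoted in the remarks

# The work (one training run) is held fixed, so the efficiency gain
# (work divided by input) equals the ratio of the two energy inputs.
efficiency_gain = hopper_mwh / blackwell_mwh

print(f"H100:      {hopper_mwh:,.0f} MWh")
print(f"Blackwell: {blackwell_mwh:,.0f} MWh")
print(f"Work-per-energy improvement: {efficiency_gain:.2f}x")
```

Under this simplified model, the run needs about 32.4 GWh on H100 versus 8.64 GWh on Blackwell, a 3.75x improvement in work per unit of energy.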

A second breakthrough is in generation. This is the first time that people think about AI as more than just an inference response. Not just inference, like showing an image of a cat and getting "cat" as the output. Inference is now generative: it's not just about recognizing, but about generating. Blackwell is designed to be generative, and this is the first time people are thinking about GPUs in the datacenter that way. As a gamer, you've always thought about the GPU as a generative engine, generating images and pixels. In the future, images, video, text, proteins, and molecules are all going to be generated by GPUs. GPUs went from graphics generation to AI training, then AI inference, and now AI generation. In the future, most of our experience will be generated. That's why the opportunity is so giant: today, when you touch a phone, the information you see was pre-recorded; in the future it will be generated, and unique. When you search, the search will be augmented using techniques such as RAG (retrieval-augmented generation). The future will all be generated, and that needs an engine. We call it Blackwell, with its second-generation Transformer Engine and our next-generation NVLink to parallelize GPUs.

Third, in this new world, the software is different. It's complex. The question is how enterprises will use the software. Windows comes as a binary: you download and install it. For software like SAP, IT departments install it for you. But if you want to create your own application with AI, someone has to package this complex software, with high-performance compute inside, and make it easy to download and use, so you can interact with it. In the future, the software is AI, and you just talk to it. The AI software will be very easy to use; it will be natural. You can connect many AIs together; we call them NIMs, AI services. We will help companies connect them: off the shelf, with customization, and hooked up to other apps. NIMs, NeMo services: we will help customers customize NIMs, and we call that our AI foundry. Nvidia has the technology, the tools, and the infrastructure to do it. Technology, expertise, and infrastructure are what make a foundry. We can help every company build custom AIs. These are customers with platforms: SAP with custom AI, Ansys with custom AI, Cadence with custom AI, NetApp with custom AI. We can manufacture those AIs for them to take to market.

For the next wave of AI, the AI has to understand the physical world. We saw some revolutionary AI from OpenAI called Sora. When Sora generates videos, they make sense. The car is on the road, making turns. The person is walking on the street. The AI understands physical laws; if we take that to the limit, the AI can act in the physical world, which is called robotics. The next generation requires new computers, new tools, Omniverse, and digital twins. We had to develop new foundation models. For that stack, we go to market as a platform, not as a tools maker. Omniverse is our digital-twin SDK, which we connect to developers. Dassault 3Dexcite with photorealistic rendering, Siemens to Omniverse, Cadence to Omniverse, Rockwell to Omniverse: all use APIs, and they can use these APIs to create digital twins. We're happy with Omniverse's success in connecting to these tools, all supercharged with Omniverse.

Blackwell is the name of both the chip and the computer system. We have an x86 system that follows our previous versions, called HGX. You can pull out the Hopper tray and simply push in Blackwell. The production transition and ramp are going to be a lot easier because the infrastructure to support it already exists. We also have DGX, our new architecture for liquid cooling, and we can create large NVLink domains. This allows 8 GPUs in one domain; in Blackwell, that's now 16 dies in the domain. For larger machines, we have stacked versions of Blackwell and Grace Blackwell, connected with NVLink Switch. Our NVLink Switch is the highest-performance switch in the world. We use nine of these to connect our Blackwells. It's very modular, and we slide them into a backplane.

The Future is AI
