Arthur Hanson

Well-known member

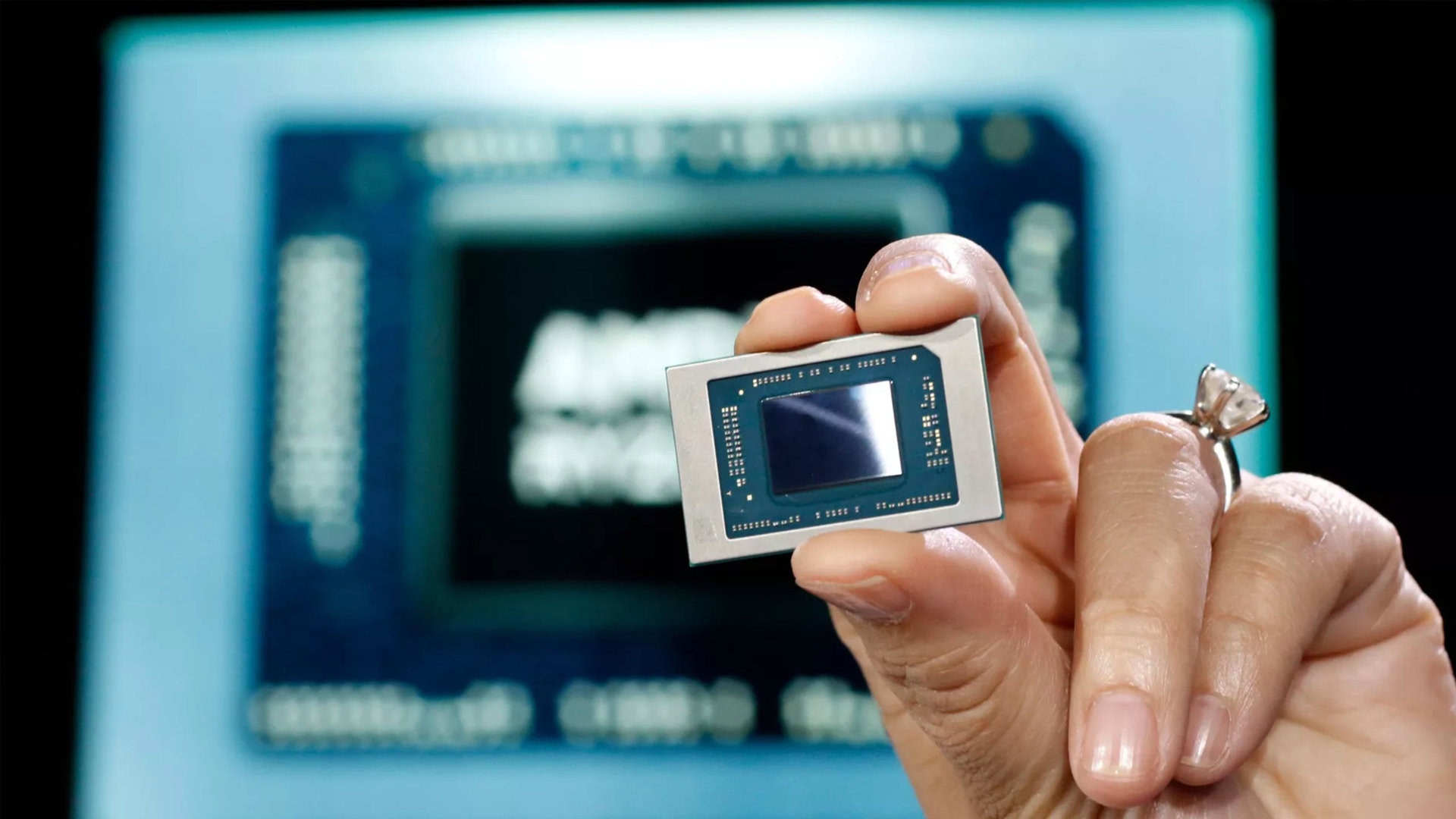

AMD announces Ryzen 7040 series processors with on-chip AI

AMD announces its Ryzen 7040 series processors, finally bringing the 7000 line to laptops. The first products will launch in March.

www.androidauthority.com

AI/ML is about to come on strong with a rapid growth cycle and I feel this will be a game changer not only for the semi-industry, but the beginning of a world changing shift in everything. Combined with massive cheap memory, AI/ML will be a massive game changer in everything and we a just about to see the tip of the spear. I see automation, education and many other fields and areas going through more changes in a generation than has occurred in centuries. This will radically change many professions in the next decade and especially education which in its current form will become totally obsolete from the Einstein rule of never memorize anything you can look up. Combined with AI/ML, this will make much of our current education and training systems totally obsolete by leveraging the best and the brightest massively by being able to recreate themselves in a program that will be in everything from supercomputers to smartphones. Any thoughts or comments sought and appreiciated.