LAS VEGAS, November 12, 2025 –Tachyum® today announced details and specifications for its 2nm Prodigy® Universal Processor, which will enable AI models with parameters many orders of magnitude larger than those of any existing solution at a fraction of the cost.

Prodigy Ultimate provides up to 21.3x higher AI rack performance than Nvidia Rubin Ultra NVL576. Prodigy Premium provides up to 25.8x higher AI rack performance than Vera Rubin 144. Technical details of the 2nm Prodigy, the first ever chip to exceed 1,000 PFLOPs on inference, will be published within a week. Nvidia Rubin delivers 50 PFLOPs.

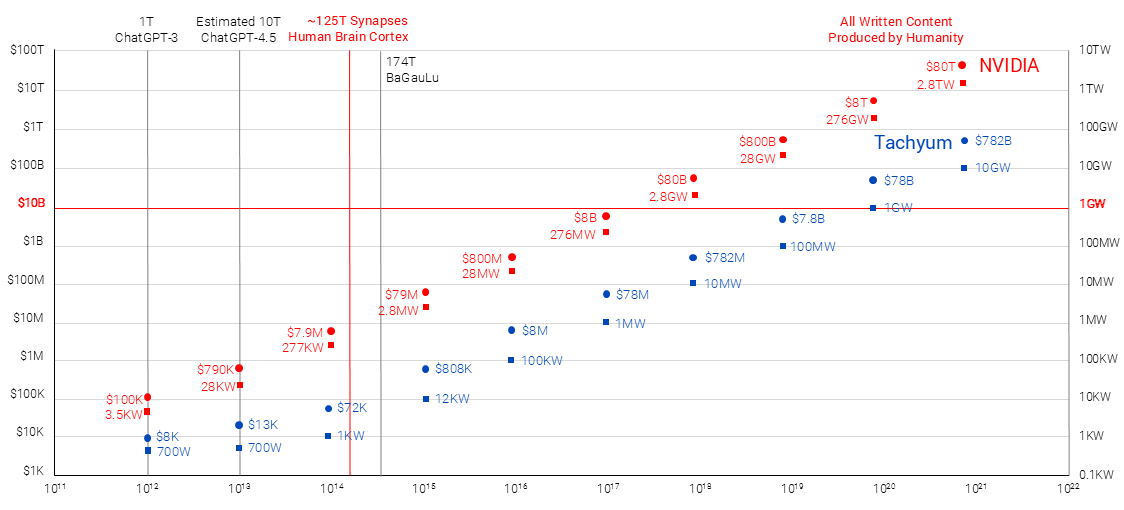

The global competition in AI continues to accelerate, with China and the United States leading the race. Current AI models demonstrate massive computational scales — for instance, ChatGPT 4 features approximately 1.8 trillion parameters, while human brains contain an estimated 150 trillion synapses. Emerging systems such as BaGauLu reach 174 trillion parameters, but the ultimate breakthrough is expected to come from models trained on the collective knowledge of humanity, exceeding 100 000 000 trillion (1020) parameters. Traditional large-scale AI solutions could cost over $8 trillion and require more than 276 gigawatts of power. In contrast, the Tachyum solution is projected to achieve comparable capabilities at an estimated cost of $78 billion and a power requirement of just 1 gigawatt — making it accessible to multiple companies and nations.

In addition to open-sourcing all software, Tachyum is making its memory technology available, using standard components, allowing 10x increase of DIMM-based memory bandwidth available for licensing by memory or processor companies, including JEDEC adoption to achieve high adoption and low cost. In 2023, Tachyum announced licensable Tachyum AI (TAI) data types, and its Tachyum Processing Unit (TPU) core is available for licensing. Tachyum is in the process of making the Instruction Set Architecture (ISA) open.

Tachyum has continually upgraded its Prodigy design to address ever-changing requirements in server, AI and HPC markets with up to 5x integer performance, up to 16x higher AI performance, 8x DRAM bandwidth, 4x chip-to-chip and I/O bandwidth, 4x scalability by supporting 16 sockets, and 2x power efficiency, with lower cost per core.

The Prodigy chip was upgraded to 2nm to significantly reduce power consumption. Reducing chiplet die size improves cost despite expensive 2nm wafers. Each chiplet in the Prodigy package integrates 256 high-performance custom 64-bit cores. The power consumption reduction is critical, as multiple chiplets occupy a single package. Backed by a recent $220 million investment, the 2nm Prodigy is being readied for tape-out.

Multiple Prodigy SKUs cover a wide range of performance and applications, including big AI, exascale supercomputing, HPC, digital currency, cloud/hyperscale, big data analytics, and databases. Prodigy Ultimate integrates 1,024 high-performance cores, 24 DDR5 17.6GT/s memory controllers and 128 PCIE 7.0 lanes. The Prodigy Premium comes with 16 DRAM channels, and 512 to 128 cores scalable to 16 socket systems. Entry-level Prodigy comes with 8 or 4 DRAM controllers and 128 to 32 cores.

Prodigy features, scalability, and price segmentation ensure rapid market penetration. Tachyum provides out-of-the-box native system software, operating systems, compilers, libraries, many applications, and AI infrastructure frameworks. It also allows running unmodified Intel/AMD x86 binaries and mixing them with native applications. This ensures that Tachyum systems can be operational by customers from day one.

“With tape-out funding now secured after a long wait, the world’s first Universal Processor can proceed to production, designed to overcome the inherent limitations of today’s data centers,” said Dr. Radoslav Danilak, founder and CEO of Tachyum. “The distinct markets addressed by Prodigy are the AI, server, and HPC markets, requiring fast and efficient chips. Tachyum’s Prodigy Premium and Ultimate will supercharge workloads with superior performance at a lower cost than any other solution on the market.”

The Prodigy Universal Processor delivers orders of magnitude higher AI performance, 3x the performance of the best x86 processors, and 6x HPC performance of the fastest GPGPU. Eliminating the need for expensive dedicated AI hardware and dramatically increasing server utilization, Prodigy reduces data center CAPEX and OPEX significantly while delivering unprecedented performance, power, and economics.

Those interested in reading the full specifications for Tachyum’s latest Prodigy Universal Processor architecture can download the solutions brief.

Tachyum is transforming the economics of AI, HPC, public and private cloud with the world’s first Universal Processor Prodigy unifying the functionality of a CPU, an HPC GPGPU, and AI accelerators to deliver industry-leading performance, cost and power efficiency. Tachyum has offices in the United States, Slovakia, Taiwan and the Czech Republic. For more information, visit https://www.tachyum.com/.