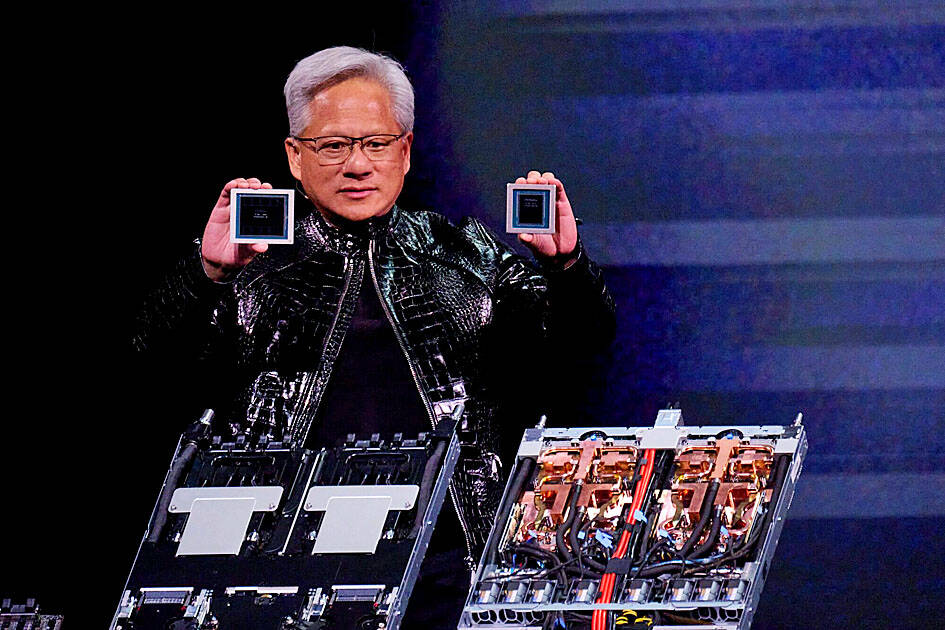

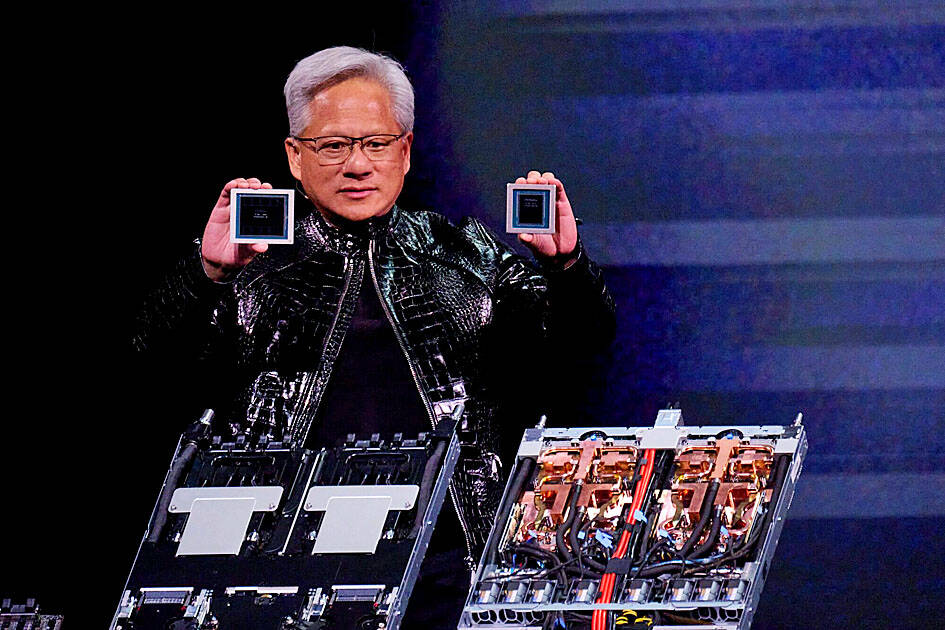

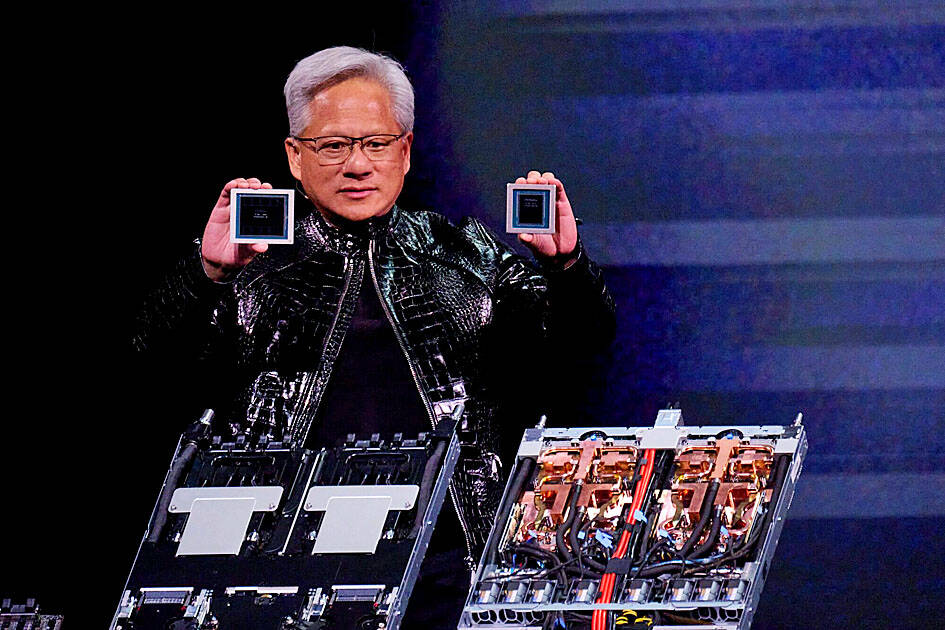

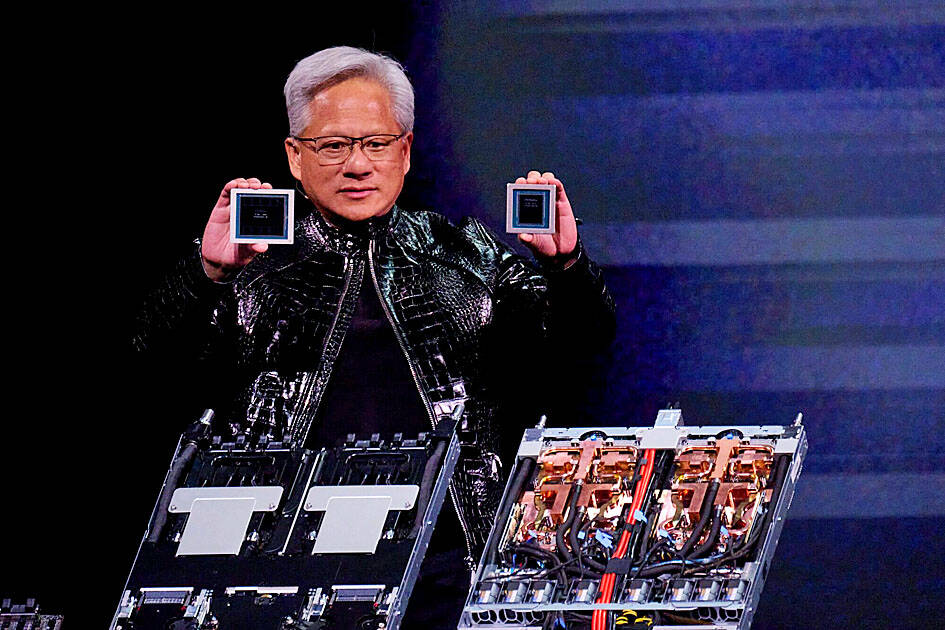

Nvidia Corp chief executive officer Jensen Huang (黃仁勳) on Monday introduced the company’s latest supercomputer platform, featuring six new chips made by Taiwan Semiconductor Manufacturing Co (TSMC, 台積電), saying that it is now “in full production.”

“If Vera Rubin is going to be in time for this year, it must be in production by now, and so, today I can tell you that Vera Rubin is in full production,” Huang said during his keynote speech at CES in Las Vegas.

The rollout of six concurrent chips for Vera Rubin — the company’s next-generation artificial intelligence (AI) computing platform — marks a strategic departure from Nvidia’s previous “one or two chip” cadence, Huang said.

Developing more than one or two new chips at a time is necessary in a world where AI models are growing 10-fold annually and the number of tokens generated is increasing five-fold each year, he said.

“It is impossible to keep up with those kinds of rates ... unless we deploy aggressive extreme codesign, innovating across all the chips, across the entire stack, all at the same time,” he said.

The six-chip road map was first teased by Huang during his visit to Taiwan in August last year, when he confirmed the Silicon Valley giant had taped out the new designs at TSMC.

At the time, he described Vera Rubin as “revolutionary,” because all six chips were new.

The suite, comprising the Vera central processing unit, Rubin graphics processing unit (GPU), NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 data processing unit and Spectrum-X Ethernet switch, succeeds the Blackwell architecture, with the chips primarily manufactured using TSMC’s advanced 3-nanometer process.

Vera Rubin delivers 3.5 times the performance of its predecessor, Blackwell, at training AI models and five times the performance at running them, Nvidia said.

The new supercomputer cuts inference costs to one-seventh of those on the Blackwell platform and reduces the number of GPUs required to train mixture-of-experts models by 75 percent, the company said.

Leading AI labs, cloud service providers and system builders, including Amazon Web Services, Meta Platforms Inc, Google and Microsoft Corp, are expected to be among the first to adopt the new platform, it added.

Separately, Advanced Micro Devices Inc (AMD), aiming to make a dent in Nvidia’s stranglehold on the AI hardware market, on Monday also announced a new chip for corporate data center use and talked up the attributes of a future generation of products for that market.

The company is adding a new model to its lineup, called the MI440X, designed for smaller corporate data centers, where clients can deploy local hardware and keep their data in their own facilities.

The announcement came as part of a keynote at CES, where AMD chief executive officer Lisa Su (蘇姿丰) also touted the firm’s top-of-the-line MI455X, saying systems based on that chip are a leap forward in the capabilities on offer.

The AI surge would continue because of the benefits the technology is bringing and its heavy computing requirements, Su said.

“We don’t have nearly enough compute for what we could possibly do,” she said. “The rate and pace of AI innovation has been incredible over the last few years. We are just getting started.”

OpenAI cofounder and president Greg Brockman joined Su on the CES stage to talk about the two companies’ partnership and plans for deploying OpenAI’s systems.

The two also talked about their shared belief that economic growth would be tied to the availability of AI resources.

www.taipeitimes.com



Nvidia chief executive officer Jensen Huang presents the company’s Rubin GPU and Vera CPU at CES in Las Vegas on Monday.

Photo: Bloomberg


Nvidia introduces new AI platform featuring six chips made by TSMC - Taipei Times
