jms_embedded

I have a question about the impact of wafer size on fab processing. I understand the general idea that larger wafers can improve throughput, but there are some nuances that seem to get glossed over. (Hmm, I can't seem to make a bulleted list in this post.)

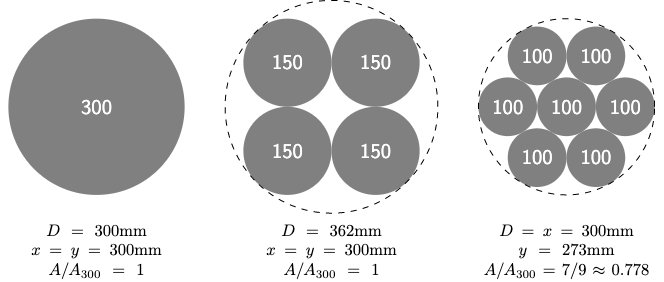

- Lithography: the area exposed per unit time is constant, so a larger wafer doesn't improve throughput unless the tools themselves get faster.

- Other steps: which ones take a constant time per wafer (so you get more throughput if you can fit more wafer area in the tool), and which are bounded by area per unit time, like lithography?

- Wafer utilization: with larger wafers, the unusable area at the edge is a smaller fraction of the total area. But when you can fit 5000 die on a 300mm wafer this effect is small; it would be a more significant benefit for larger die (> 10mm x 10mm).
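The trade-offs in the list above can be put into a toy model. This is only a sketch under stated assumptions: each step's time per wafer is modeled as a fixed per-wafer time plus (wafer area / area rate), and the step parameters below are hypothetical numbers chosen to show the scaling, not real tool data. Die count uses the common first-order gross-die-per-wafer approximation, which ignores edge exclusion, the notch, and scribe lines.

```python
import math
from typing import Optional


def wafer_area_mm2(diameter_mm: float) -> float:
    """Area of a full circular wafer (ignores notch and edge exclusion)."""
    return math.pi * (diameter_mm / 2) ** 2


def area_throughput_mm2_per_h(diameter_mm: float,
                              fixed_s_per_wafer: float,
                              area_rate_mm2_per_s: Optional[float]) -> float:
    """Wafer area one tool processes per hour under the toy model.

    Time per wafer = fixed per-wafer time + wafer area / area rate.
    Pass area_rate_mm2_per_s=None for a purely fixed-time step.
    """
    area = wafer_area_mm2(diameter_mm)
    t = fixed_s_per_wafer
    if area_rate_mm2_per_s is not None:
        t += area / area_rate_mm2_per_s
    return 3600.0 * area / t


def gross_die_per_wafer(diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate: wafer area / die area, minus an edge-loss term."""
    ideal = wafer_area_mm2(diameter_mm) / die_area_mm2
    edge = math.pi * diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(ideal - edge)


def edge_loss_fraction(diameter_mm: float, die_area_mm2: float) -> float:
    """Fraction of the ideal die count lost to the edge term (= 2*sqrt(2S)/d)."""
    ideal = wafer_area_mm2(diameter_mm) / die_area_mm2
    edge = math.pi * diameter_mm / math.sqrt(2 * die_area_mm2)
    return edge / ideal


if __name__ == "__main__":
    # Hypothetical steps: (fixed seconds per wafer, area rate in mm^2/s or None)
    steps = {
        "fixed-time step": (120.0, None),
        "area-limited step (litho-like)": (0.0, 1000.0),
        "per-wafer overhead + area-limited": (30.0, 1000.0),
    }
    for name, (fixed_s, rate) in steps.items():
        ratio = (area_throughput_mm2_per_h(450, fixed_s, rate)
                 / area_throughput_mm2_per_h(300, fixed_s, rate))
        print(f"{name}: 450/300 area-throughput ratio = {ratio:.2f}")

    # Die areas in mm^2: ~14 (about 5000 die on 300mm), 10x10, 20x20
    for s in (14, 100, 400):
        for d in (300, 450):
            print(f"d={d}mm S={s}mm^2: {gross_die_per_wafer(d, s)} die, "
                  f"edge loss {100 * edge_loss_fraction(d, s):.1f}%")
```

In this model a purely fixed-time step gains the full 2.25x area ratio going from 300mm to 450mm, a purely area-limited step gains nothing, and a step with both a per-wafer overhead and an area-limited part lands in between. The edge-loss fraction works out to 2√(2S)/d, so it shrinks as the wafer diameter d grows and grows with the square root of die area S, which is consistent with the point that larger die benefit more.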

Is the bottleneck to 450mm wafers due more to the tools needing to be redesigned to fit 450mm wafers, or to some inherent practical mechanical limitation of the wafer itself (weight, thickness, brittleness, or flexing of large-diameter wafers)? And is there a reason we don't process arrays of smaller wafers instead of a single larger wafer? That's not ideal in space-constrained tools, but if the problem is that 450mm wafers are mechanically impractical, then increasing the area that gets processed simultaneously seems like a slightly different goal from increasing the area of the wafer itself.
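For a rough sense of the mechanical side, thin-plate theory gives a scaling law for how much a wafer sags under its own weight. For a uniform plate of radius r and thickness t, the load per area is ρgt and the bending stiffness goes as Et³, so the peak deflection scales as ρg·r⁴/(E·t²) for geometrically similar supports. This sketch assumes the same material (so ρ, g, and E cancel) and nominal thicknesses of about 775 µm for 300mm and 925 µm for 450mm wafers; treat those numbers as assumptions, and note it says nothing about handling, breakage, or tool redesign cost.

```python
def mass_ratio(d_new_mm: float, t_new_um: float,
               d_ref_mm: float, t_ref_um: float) -> float:
    """Same material: mass scales as diameter^2 * thickness."""
    return (d_new_mm / d_ref_mm) ** 2 * (t_new_um / t_ref_um)


def self_weight_sag_ratio(d_new_mm: float, t_new_um: float,
                          d_ref_mm: float, t_ref_um: float) -> float:
    """Thin-plate scaling: self-weight deflection ~ r^4 / t^2 (same material, similar supports)."""
    return (d_new_mm / d_ref_mm) ** 4 * (t_ref_um / t_new_um) ** 2


def thickness_for_equal_sag(d_new_mm: float, d_ref_mm: float, t_ref_um: float) -> float:
    """Thickness at which the larger wafer sags no more than the reference wafer."""
    return t_ref_um * (d_new_mm / d_ref_mm) ** 2


if __name__ == "__main__":
    # Assumed nominal values (diameter in mm, thickness in um)
    D300, T300 = 300.0, 775.0
    D450, T450 = 450.0, 925.0
    print(f"mass ratio 450/300: {mass_ratio(D450, T450, D300, T300):.2f}")
    print(f"self-weight sag ratio 450/300: {self_weight_sag_ratio(D450, T450, D300, T300):.2f}")
    print(f"thickness for equal sag: {thickness_for_equal_sag(D450, D300, T300):.0f} um")
```

Under these assumptions, the 450mm wafer sags roughly 3.5x as much as a 300mm wafer, and matching the 300mm sag would take a wafer more than twice as thick, because sag grows with the fourth power of diameter but falls only with the square of thickness.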