AI/ML functions are moving to the edge to save power and reduce latency. This enables local processing without the overhead of transmitting large volumes of data over power-hungry, slow communication links to servers in the cloud. Of course, the cloud offers high performance and capacity for processing these workloads. Yet if the workloads can be handled at the edge, albeit with reduced processing power, there is still likely to be a net advantage in power and latency. In the end, it boils down to the performance of the edge-based AI/ML processor.

As a Codasip white paper points out, embedded devices are typically resource constrained. Without the proper hardware resources, AI/ML at the edge will not be feasible. The white paper, titled “Embedded AI on L-Series Cores”, states that conventional microcontrollers, even when they have floating-point (FP) and DSP units, are hard pressed to run AI/ML workloads. Even with SIMD instructions, more is required to achieve good results.

Google’s introduction of TensorFlow Lite for Microcontrollers (TFLite-Micro) in 2021 has opened the door for edge-based inference on hardware targeted at IoT and other low-power, small-footprint devices. TFLite-Micro uses an interpreter with a static memory planner. Most importantly, it also supports vendor-specific optimizations. It runs out of the box on just about any embedded platform and delivers operations such as convolution, tensor multiplication, resize and slicing. Because it supports domain-specific optimizations, further improvements are possible through embedded processor customization.
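To make the idea of static memory planning concrete, here is a minimal sketch in C of a bump allocator that carves buffers out of one fixed array, with no heap allocation. It is not TFLite-Micro's planner or API; the `Arena` type and the `arena_init` and `arena_alloc` functions are hypothetical names chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical fixed-size arena: all buffers come from one static array,
   so the memory footprint is known before the program runs. */
typedef struct {
    uint8_t *base;  /* start of the caller-provided buffer */
    size_t size;    /* total bytes available */
    size_t used;    /* bytes handed out so far, including padding */
} Arena;

void arena_init(Arena *a, uint8_t *buffer, size_t size) {
    assert(a != NULL && buffer != NULL);
    a->base = buffer;
    a->size = size;
    a->used = 0;
}

/* Returns a pointer to `bytes` bytes at an offset that is a multiple of
   `align` (a power of two), or NULL if the arena is exhausted.
   Assumption: the buffer itself starts at a suitably aligned address. */
void *arena_alloc(Arena *a, size_t bytes, size_t align) {
    assert(align != 0 && (align & (align - 1)) == 0);
    size_t start = (a->used + align - 1) & ~(align - 1);
    if (start > a->size || bytes > a->size - start) {
        return NULL;  /* out of space: fail loudly instead of using a heap */
    }
    a->used = start + bytes;
    return a->base + start;
}
```

A real planner also reuses memory between tensors whose lifetimes do not overlap; this sketch only shows the fixed-buffer principle.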

Codasip offers configurable, application-specific processors that can make good use of TFLite-Micro’s optimization capability. The opportunity for customization arises because each application will have its own neural network and training data. This makes it advantageous to tailor the processor to the particular needs of the application.

All of the processors in Codasip’s broad portfolio can run TFLite-Micro. The white paper focuses on their L31 embedded core, working with the well-known “MNIST handwritten digits classification” training set and a neural network comprising two convolutional and pooling layers, at least one fully connected layer, vectorized nonlinear functions, and data resize and normalization operations.

During the early stages of the system design process, designers can use Codasip Studio to profile the code and see where it can be improved. In their example, ~84% of the time is spent in the image convolution function. Looking at the source code, they identify the code that uses the most CPU time. Using disassembler output, they determine that creating a new instruction that combines the heavily repeated mul and c.add operations will improve performance. Another change they evaluate is replacing vector loads with byte loads that use an immediate address increment.
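The hot loop being optimized has roughly the following shape. This is a sketch in C under stated assumptions, not the white paper's code: `mac_i8`, `conv_window_i8` and `conv2d_i8` are hypothetical names, and `mac_i8` stands in for the fused multiply-add instruction that on a customized core would execute as one operation. The post-incremented pointers mirror a byte load with an immediate address increment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a custom fused multiply-accumulate instruction.
   In plain C it is a multiply followed by an add; a customized core could
   map the pair to a single instruction. */
static inline int32_t mac_i8(int32_t acc, int8_t a, int8_t b) {
    return acc + (int32_t)a * (int32_t)b;
}

/* Dot product over one row of a filter window. Each pointer is read and
   then advanced by one byte, as a post-increment byte load would do. */
int32_t conv_window_i8(const int8_t *input, const int8_t *kernel, size_t n) {
    assert(input != NULL && kernel != NULL);
    int32_t acc = 0;
    for (size_t i = 0; i < n; ++i) {
        acc = mac_i8(acc, *input++, *kernel++);
    }
    return acc;
}

/* Single-channel 2D convolution, stride 1, no padding. As in most neural
   network frameworks, the kernel is not flipped (cross-correlation).
   `out` must hold (in_h - k_h + 1) * (in_w - k_w + 1) values. */
void conv2d_i8(const int8_t *in, size_t in_h, size_t in_w,
               const int8_t *k, size_t k_h, size_t k_w, int32_t *out) {
    assert(k_h >= 1 && k_w >= 1 && k_h <= in_h && k_w <= in_w);
    size_t out_h = in_h - k_h + 1;
    size_t out_w = in_w - k_w + 1;
    for (size_t y = 0; y < out_h; ++y) {
        for (size_t x = 0; x < out_w; ++x) {
            int32_t acc = 0;
            for (size_t r = 0; r < k_h; ++r) {
                acc += conv_window_i8(in + (y + r) * in_w + x,
                                      k + r * k_w, k_w);
            }
            out[y * out_w + x] = acc;
        }
    }
}
```

Because the innermost loop runs once per kernel element per output pixel, saving even one instruction per iteration has a large effect on total cycle count, which is why profiling points to this loop first.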

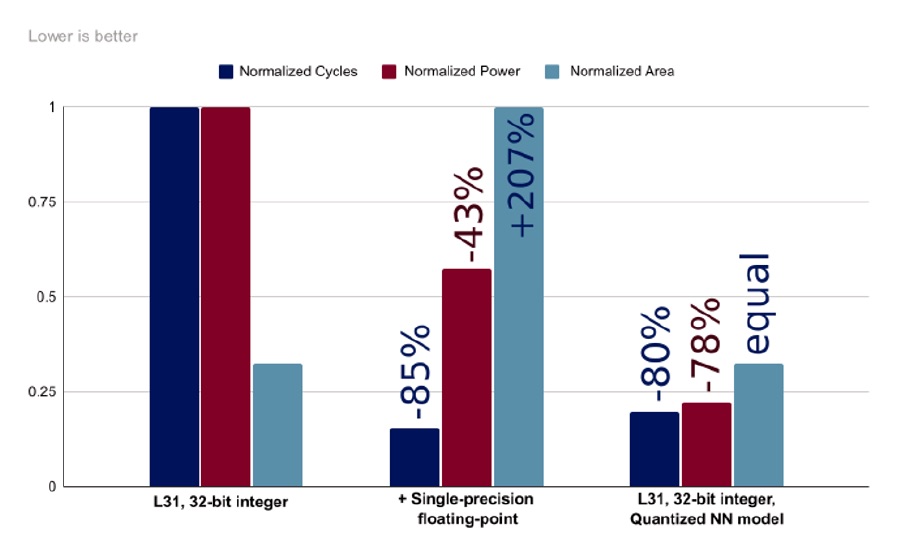

The Codasip Studio profiler can provide estimates of the processor’s power and area. This helps designers choose between standard variants of the L31 core. In this case, they explored the effects of removing the FPU. TFLite-Micro supports quantization of neural network parameters and input data. With integer-only data, the FPU can be dispensed with. Of course, there is a trade-off in accuracy, but this can also be evaluated at this stage of the process. The table below shows the benefits of moving to integer arithmetic and using a quantized neural network model.
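As an illustration of what quantization means for the data, the sketch below follows the affine int8 mapping used by TensorFlow Lite, real_value = scale * (quantized_value - zero_point). The `QuantParams` type and the function names are hypothetical, and the float arithmetic shown here would typically run offline during model conversion; on the target, inference then works on the int8 values.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container for per-tensor quantization parameters. */
typedef struct {
    float scale;         /* real-value step per integer step, must be > 0 */
    int32_t zero_point;  /* integer that represents real value 0.0 */
} QuantParams;

/* Map a real value to int8, rounding half away from zero and saturating
   at the int8 limits. */
int8_t quantize_i8(float x, QuantParams p) {
    assert(p.scale > 0.0f);
    float scaled = x / p.scale + (float)p.zero_point;
    if (scaled > 127.0f) return 127;
    if (scaled < -128.0f) return -128;
    return (int8_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f);
}

/* Recover the approximate real value: scale * (q - zero_point). */
float dequantize_i8(int8_t q, QuantParams p) {
    return p.scale * (float)((int32_t)q - p.zero_point);
}
```

The difference between a value and its quantize/dequantize round trip is the quantization error, which is the source of the accuracy trade-off mentioned above.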

The Codasip white paper concludes with a closer look at how the L31 operates in this use case with the new instructions and compares it with operation before the instructions were added. Using their software tools, it is possible to see the precise savings. Having this kind of control over the performance of an embedded processor can provide a large advantage in the final product. The white paper also shows how Codasip’s CodAL language is used to create the assembly encoding for new instructions. CodAL makes it easy to iterate while defining new instructions to achieve the best results.

To move AI/ML operations to the edge, designers must look at every avenue to optimize the system. To gain the latency improvements and overall power savings that edge-based processing promises, every effort must be made to make the power and performance profile of the embedded processor as good as possible. Codasip demonstrates an effective approach to solving these challenges. The white paper is available for download on the Codasip website.
